Apple’s Neural Engine, Qualcomm’s Hexagon NPU, and MediaTek’s APU are three of the most important accelerators in modern mobile AI hardware. They largely determine how efficiently smartphones run on-device machine learning workloads within tight power and memory limits. Apple’s Neural Engine is a tightly integrated, general-purpose AI accelerator optimized for Apple’s ecosystem, delivering high performance across diverse on-device ML tasks. Qualcomm’s Hexagon NPU, an evolved DSP with dedicated tensor hardware, excels at heterogeneous, power-efficient computing for always-on AI features. MediaTek’s APU is a dedicated multi-core block aimed at balancing performance and efficiency across a wide range of Android AI applications. Each architecture reflects a distinct design philosophy tailored to its platform strategy for accelerating AI inference.

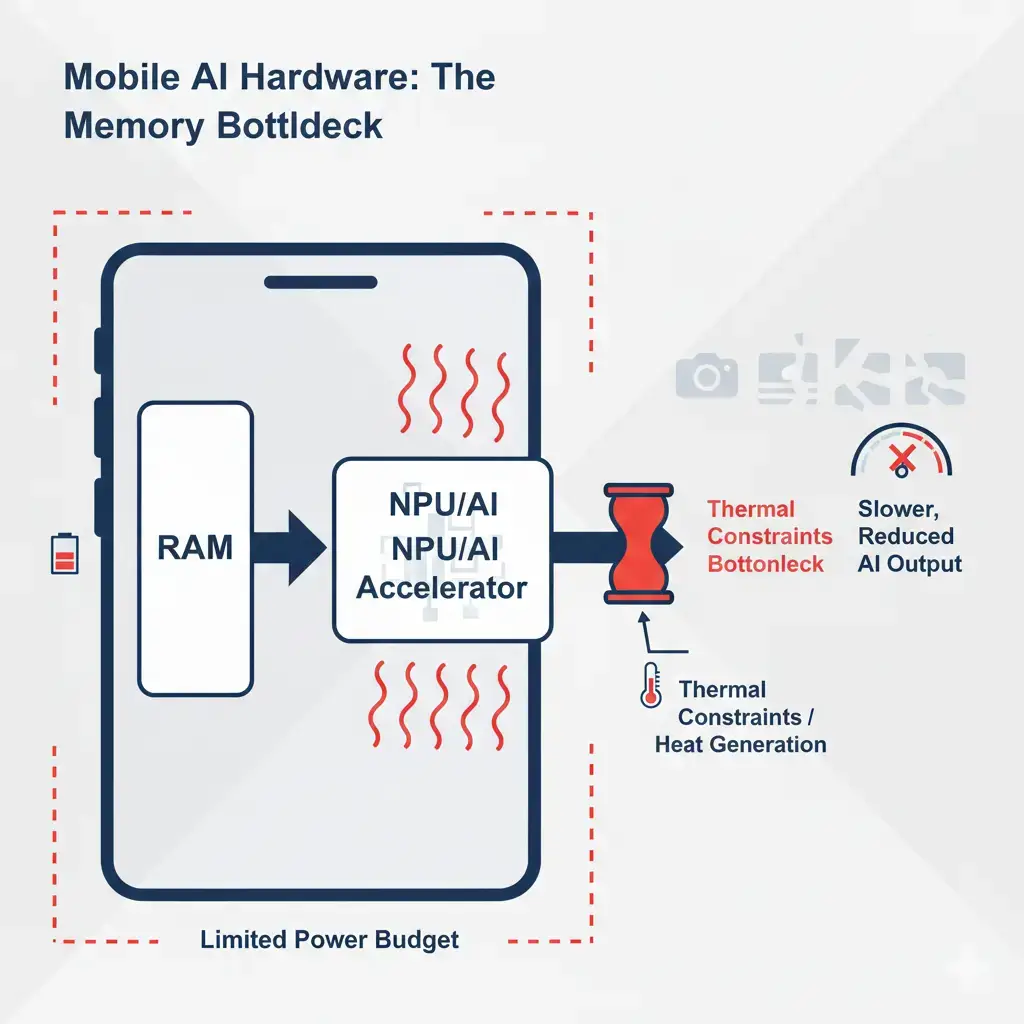

The spread of AI features in consumer devices calls for specialized hardware for on-device machine learning inference. General-purpose CPUs, and even GPUs, often fall short of the performance per watt that real-time, privacy-preserving AI requires. This is especially true within typical mobile power envelopes of only a few watts sustained. Keeping data on-device for privacy and latency, instead of paying the overhead of cloud communication, means the entire inference workload must fit inside that budget. Comparing Apple’s Neural Engine, Qualcomm’s Hexagon NPU, and MediaTek’s APU shows the architectural tradeoffs each vendor makes to meet these constraints.

This demand drives the development of dedicated AI accelerators, often termed Neural Processing Units (NPUs) or AI Processing Units (APUs). This article delves into the technical distinctions between three prominent examples: Apple’s Neural Engine, Qualcomm’s Hexagon NPU, and MediaTek’s APU, examining their architectures, performance characteristics, and real-world impact.

What Each Architecture Does

Apple Neural Engine: Integrated within Apple’s A-series and M-series SoCs, the Neural Engine is a dedicated, multi-core hardware block designed for high-throughput execution of neural network operations. Its primary role is to accelerate a broad spectrum of AI tasks, from computational photography to on-device voice processing, with seamless integration into Apple’s software ecosystem. The Neural Engine shares the SoC’s memory system and is targeted through Apple’s own toolchain. This lets it keep data movement and memory footprint low, which matters both for latency in interactive applications and for fitting complex models within device memory.

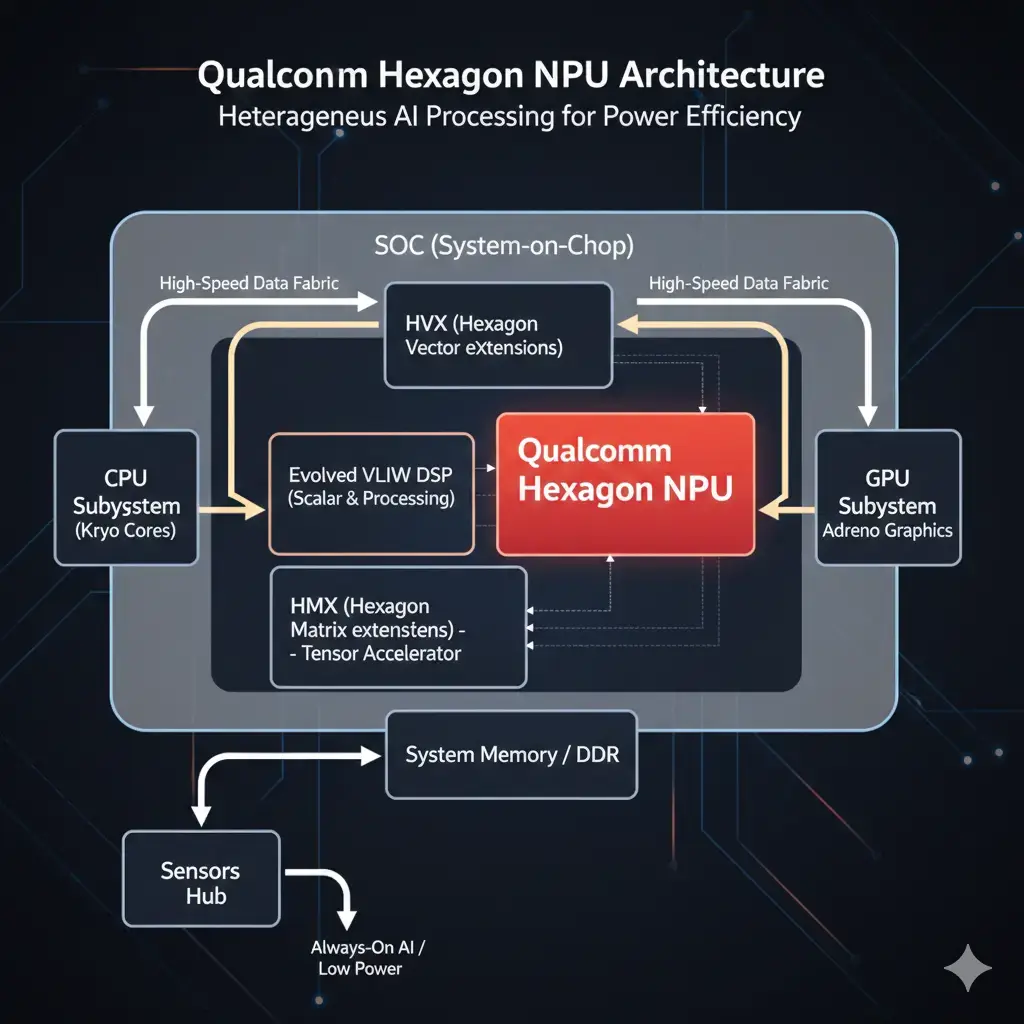

Qualcomm Hexagon NPU: Part of the broader Qualcomm AI Engine, the Hexagon NPU evolves Qualcomm’s Digital Signal Processor (DSP) architecture. It incorporates dedicated vector (HVX, Hexagon Vector eXtensions) and matrix/tensor (HMX, Hexagon Matrix eXtensions) accelerators to efficiently handle matrix multiplications and convolutions. Its design emphasizes heterogeneous computing, orchestrating AI workloads across the CPU, GPU, and Hexagon for optimal power efficiency, particularly for always-on features. This lets the device assign each task to the most power-efficient core that can handle it, extending battery life and reducing thermal load for sustained edge AI operations while keeping the user experience consistent.
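To make that tradeoff concrete, here is a minimal sketch of per-task backend selection. It picks the lowest-energy unit that supports a task and still meets its latency budget. The `Backend` class, the task names, and every latency and power figure are hypothetical. Real schedulers in Qualcomm’s stack weigh many more factors, and this is not Qualcomm’s algorithm.

```python
from dataclasses import dataclass, field

@dataclass
class Backend:
    """A compute unit with hypothetical power and per-task latency figures."""
    name: str
    power_w: float                                  # average active power (hypothetical)
    latency_ms: dict = field(default_factory=dict)  # task -> latency; absent = unsupported

def energy_mj(backend: Backend, task: str) -> float:
    # Watts * milliseconds = millijoules
    return backend.power_w * backend.latency_ms[task]

def pick_backend(task: str, backends: list, budget_ms: float):
    """Lowest-energy backend that supports `task` within `budget_ms`, else None."""
    candidates = [b for b in backends
                  if task in b.latency_ms and b.latency_ms[task] <= budget_ms]
    return min(candidates, key=lambda b: energy_mj(b, task), default=None)

# Illustrative numbers only, not measurements of any Snapdragon chip.
BACKENDS = [
    Backend("cpu", power_w=2.0, latency_ms={"wake_word": 4.0, "segmentation": 120.0}),
    Backend("gpu", power_w=3.0, latency_ms={"segmentation": 25.0}),
    Backend("npu", power_w=0.5, latency_ms={"wake_word": 2.0, "segmentation": 30.0}),
]

print(pick_backend("wake_word", BACKENDS, budget_ms=10.0).name)     # npu
print(pick_backend("segmentation", BACKENDS, budget_ms=28.0).name)  # gpu
```

With a 28 ms budget the NPU is too slow for segmentation, so the GPU wins even though it costs 75 mJ. Relax the budget to 33 ms and the NPU’s 15 mJ becomes the best choice.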

MediaTek APU: MediaTek’s AI Processing Unit is a dedicated hardware block found in its Dimensity and some Helio SoCs. It uses a multi-core design optimized for accelerating AI inference across various precision levels. The APU aims to balance performance and power efficiency across a wide array of AI applications, targeting high-end and premium mid-range Android smartphones. This balance is critical because these devices span a range of power envelopes and memory capacities, and model sizes and computational demands differ significantly across applications. Smartphone RAM ceilings, typically 6 to 16 GB, directly limit the maximum model size that can be loaded and processed efficiently.
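A back-of-the-envelope check shows why RAM ceilings matter: weight storage is roughly parameter count times bits per weight. The sketch below assumes a hypothetical 7-billion-parameter model. It also assumes a hypothetical rule that one app may use about half of device RAM. It ignores activations and runtime overhead, so real requirements are higher.

```python
def weights_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GiB (ignores activations, caches, runtime overhead)."""
    return num_params * bits_per_weight / 8 / 2**30

def fits_in_ram(num_params: float, bits_per_weight: int,
                device_ram_gib: float, usable_fraction: float = 0.5) -> bool:
    # usable_fraction is a hypothetical share of RAM one app can claim;
    # the OS and other apps need the rest.
    return weights_gib(num_params, bits_per_weight) <= device_ram_gib * usable_fraction

for bits in (16, 8, 4):
    size = weights_gib(7e9, bits)
    print(f"7B params @ {bits}-bit: {size:.1f} GiB, fits 8 GiB phone: {fits_in_ram(7e9, bits, 8)}")
```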

Neural Engine vs Hexagon NPU vs MediaTek APU: Architectural Overview

While all three accelerate AI inference, their underlying architectures and integration philosophies differ significantly. The Neural Engine is a purpose-built accelerator tightly coupled to the rest of the SoC. This minimizes data-movement overhead and latency for real-time on-device inference.

The Hexagon NPU builds on an evolved DSP foundation with specialized extensions. The MediaTek APU is a dedicated multi-core block, often with a flexible design that adapts to varied AI workloads. These choices reflect different design philosophies and optimization priorities, summarized below.

| Feature | Apple Neural Engine | Qualcomm Hexagon NPU | MediaTek APU |
|---|---|---|---|
| Core Architecture | Dedicated neural network cores | Evolved VLIW DSP + vector/tensor accelerators (HVX, HMX) | Multi-core dedicated AI processors |
| Integration | Tightly integrated (SoC) | Heterogeneous (CPU/GPU/DSP) | Dedicated block (SoC) |
| Primary Focus | General ML, ecosystem | Power-efficient, DSP-heavy | Balanced performance/watt |
| Software Stack | Core ML | Neural Processing SDK (SNPE) | NeuroPilot AI Platform |
| Accelerator Precision | FP16, INT8; INT4 on recent generations | FP16, INT8; INT4 on recent generations | FP16, INT8; INT4 on recent generations |

Precision support varies by chip generation. FP32 workloads are typically converted to lower precision or run on the CPU/GPU rather than the NPU.

Neural Engine vs Hexagon NPU vs MediaTek APU: Performance Comparison

Direct performance comparisons are difficult because vendors rely on proprietary benchmarks and different software stacks. An NPU’s effective performance on an edge device also depends on more than raw throughput. Efficient memory access and low latency for user-facing applications matter just as much, which makes this a key system tradeoff. Nevertheless, some general trends emerge.
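The roofline model is a standard way to show why raw TOPS alone do not determine performance. Attainable throughput is capped by whichever is lower: peak compute, or memory bandwidth multiplied by arithmetic intensity (operations per byte moved). The 40 TOPS and 60 GB/s figures below are hypothetical and do not describe any of the three chips.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline bound: the lesser of peak compute and bandwidth * arithmetic intensity."""
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000  # GB/s * ops/B = Gops/s
    return min(peak_tops, memory_bound_tops)

def ridge_point(peak_tops: float, bandwidth_gb_s: float) -> float:
    """Arithmetic intensity (ops/byte) above which a layer becomes compute-bound."""
    return peak_tops * 1000 / bandwidth_gb_s

# Hypothetical accelerator: 40 TOPS peak fed by 60 GB/s of DRAM bandwidth.
for intensity in (10, 100, 2000):
    print(intensity, attainable_tops(40, 60, intensity))
print(round(ridge_point(40, 60)))  # 667
```

Layers below the ridge point (about 667 ops/byte here) are memory-bound, so extra TOPS would not speed them up.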

Apple’s Neural Engine delivers top-tier throughput (TOPS) and efficiency within its ecosystem, benefiting from tight hardware-software co-design. Its strength shows in complex, sustained AI tasks, where sustained performance matters more to real-world user experience than peak benchmark figures.

Qualcomm’s Hexagon NPU prioritizes power efficiency for specific, often always-on, AI tasks. It is designed to operate within stringent mobile power envelopes of a few watts, even during continuous operation. Its heterogeneous approach lets the platform offload suitable workloads from the CPU and GPU to the NPU. This achieves excellent performance per watt for tasks like voice processing and certain camera enhancements.
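Performance per watt translates directly into energy per inference. This minimal sketch assumes a hypothetical 5 GOP camera model running at 30 frames per second. It uses illustrative effective efficiencies (sustained, not marketing peak) for an NPU, GPU, and CPU. The numbers are not measurements.

```python
def energy_per_inference_mj(gops: float, effective_tops_per_w: float) -> float:
    """Energy for one inference: operations divided by operations-per-joule."""
    ops_per_joule = effective_tops_per_w * 1e12  # 1 TOPS/W = 1e12 ops per joule
    return gops * 1e9 / ops_per_joule * 1e3      # joules -> millijoules

def average_power_mw(gops: float, effective_tops_per_w: float, inferences_per_s: float) -> float:
    # mJ per inference * inferences per second = mW
    return energy_per_inference_mj(gops, effective_tops_per_w) * inferences_per_s

# Hypothetical 5 GOP model at 30 fps on units with different effective efficiency.
for unit, tops_per_w in (("npu", 10.0), ("gpu", 2.0), ("cpu", 0.5)):
    print(unit, round(average_power_mw(5, tops_per_w, 30), 1), "mW")
```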

MediaTek’s APU has improved significantly across generations and often competes closely with Qualcomm in raw TOPS and efficiency for a broad range of AI applications. It aims for a balanced profile, treating a good balance between peak and sustained performance as a key design goal. This makes it suitable for diverse Android AI features, from gaming to photography.

Neural Engine vs Hexagon NPU vs MediaTek APU: Power & Thermal Behavior

Power efficiency is paramount for mobile AI accelerators. All three architectures employ techniques like low-precision arithmetic (INT8, INT4) to reduce computational complexity and memory footprint, thereby lowering power consumption.
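To illustrate low-precision arithmetic, the sketch below applies generic symmetric per-tensor INT8 quantization to a few hypothetical weights. Vendor toolchains (Core ML Tools, Qualcomm’s SDKs, NeuroPilot) use more elaborate schemes, such as per-channel scales and calibration data. Treat this as the basic idea rather than any vendor’s method.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    return [qi * scale for qi in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.55]  # hypothetical FP32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")
# Each INT8 value needs 1 byte versus 4 bytes for FP32: a 4x smaller footprint.
```

Round-to-nearest keeps every reconstruction error within half a quantization step (`scale / 2`). That is why INT8 often preserves accuracy while cutting memory traffic and arithmetic cost.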

Apple’s Neural Engine benefits from Apple’s SoC-wide power management. It can sustain high performance, but extreme, prolonged AI workloads can trigger thermal throttling in some iPhone models. Throttling prevents overheating and protects the device, at the cost of sustained peak performance. Mobile devices typically sustain only a few watts, so thermal limits directly cap sustained compute capacity. They therefore constrain how large and complex a model can run continuously without degradation.
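A toy first-order thermal model shows why sustained performance falls below peak. The chip heats up at full power until it reaches a temperature limit, then alternates between throttled and full power. The thermal resistance, heat capacity, power levels, and 45 °C limit are hypothetical. Performance is assumed to scale linearly with power.

```python
def simulate_perf(peak_w=5.0, throttled_w=2.0, r_c_per_w=8.0, c_j_per_c=15.0,
                  t_ambient=25.0, t_limit=45.0, seconds=600, dt=1.0):
    """Toy first-order thermal model with a simple on/off throttle.

    Returns the performance level at each step (1.0 = peak), assuming
    performance scales linearly with power. All constants are hypothetical.
    """
    temp = t_ambient
    perf = []
    for _ in range(int(seconds / dt)):
        power = throttled_w if temp >= t_limit else peak_w
        perf.append(power / peak_w)
        # Heat added by the chip minus heat leaving through thermal resistance R.
        temp += (power - (temp - t_ambient) / r_c_per_w) * dt / c_j_per_c
    return perf

perf = simulate_perf()
burst = perf[:60]
sustained = perf[-300:]
print(f"first minute: {sum(burst) / len(burst):.2f}, last 5 minutes: {sum(sustained) / len(sustained):.2f}")
```

With these constants the chip runs at full speed for roughly the first 80 seconds, then settles to about half of peak performance.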

Qualcomm’s Hexagon NPU is designed with a strong emphasis on low power for “always-on” scenarios. Its DSP heritage makes it inherently efficient for signal processing tasks, and dedicated tensor accelerators further optimize for ML. This design helps minimize thermal output during typical AI usage.
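For always-on features, average power is dominated by how little the accelerator draws while mostly idle. The minimal sketch below rests on these hypothetical assumptions:

- a wake-word detector active 5% of the time,
- 1 mW idle power,
- 50 mW active power on a low-power path versus 300 mW on the CPU,
- a battery of roughly 15 Wh.

```python
def duty_cycled_power_mw(active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Time-weighted average power of a workload active `duty_cycle` of the time."""
    return duty_cycle * active_mw + (1 - duty_cycle) * idle_mw

def daily_battery_percent(avg_mw: float, battery_wh: float) -> float:
    # avg_mw * 24 h = mWh per day; battery capacity converted from Wh to mWh
    return 100 * avg_mw * 24 / (battery_wh * 1000)

# Hypothetical wake-word detector active 5% of the time on a ~15 Wh battery.
for unit, active_mw in (("low-power NPU path", 50.0), ("CPU", 300.0)):
    avg = duty_cycled_power_mw(active_mw, idle_mw=1.0, duty_cycle=0.05)
    print(f"{unit}: {avg:.2f} mW average, {daily_battery_percent(avg, 15.0):.2f}% of battery per day")
```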

MediaTek’s APU also focuses on power efficiency, with each generation improving its performance-per-watt metrics. Its multi-core design allows for flexible power scaling, enabling the APU to adapt to varying workload demands and manage thermal output effectively for sustained operation.
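One common argument for multi-core scaling is that spreading work across more cores lets each core run at lower frequency and voltage. That cuts dynamic power faster than it adds leakage. The toy model below assumes voltage proportional to frequency, so dynamic power grows with the cube of frequency, plus fixed leakage per powered-on core. All constants are hypothetical, and this is not MediaTek’s actual power policy.

```python
def cluster_power(throughput: float, cores: int, k: float = 1.0,
                  leak_per_core: float = 0.05, f_min: float = 0.3, f_max: float = 1.0):
    """Relative power for `cores` active cores sharing a workload, or None if infeasible.

    Toy DVFS model: throughput = cores * f, voltage assumed proportional to f,
    so dynamic power per core ~ k * f**3; every powered-on core also leaks.
    """
    f = throughput / cores
    if f > f_max:
        return None                 # too few cores to reach the target
    f = max(f, f_min)               # cannot clock lower; surplus speed is wasted
    return cores * (k * f ** 3 + leak_per_core)

def best_core_count(throughput: float, max_cores: int = 4, **model):
    """Number of active cores that meets `throughput` at the lowest modeled power."""
    powers = {n: cluster_power(throughput, n, **model) for n in range(1, max_cores + 1)}
    feasible = {n: p for n, p in powers.items() if p is not None}
    return min(feasible, key=feasible.get) if feasible else None

for load in (0.2, 0.9, 3.5):
    print(load, best_core_count(load))
```

In this model, light loads run best on one core, moderate loads on three slower cores, and heavy loads need all four.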

Memory & Bandwidth Handling

Efficient memory access and high bandwidth are critical for AI accelerators, because neural network models often involve large weights and activations that can approach smartphone RAM ceilings. These constraints are explored in detail in our guide on On-Device AI Memory Limits: Performance, Thermal, and Bandwidth Explained. All three architectures are designed to minimize memory bottlenecks.

Apple’s Neural Engine benefits from direct, high-bandwidth access to the unified memory architecture in M-series chips and from optimized memory controllers in A-series chips. This tight coupling reduces latency and maximizes data throughput for complex models. High bandwidth lets the Neural Engine move large intermediate tensors and model weights quickly, so memory access does not become the bottleneck for latency-sensitive tasks. That matters given the bandwidth limits inherent in mobile SoC designs.
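Some models are too large for on-chip memory, so every inference step must stream all of their weights from DRAM, as in token-by-token generation with large language models. In that case bandwidth, rather than TOPS, sets an upper bound on speed. A minimal sketch with a hypothetical 3-billion-parameter model and 60 GB/s of usable bandwidth:

```python
def max_steps_per_s(num_params: float, bits_per_weight: int, bandwidth_gb_s: float) -> float:
    """Upper bound on inference steps per second when every step must stream all
    weights from DRAM once (memory-bound regime; ignores activations and caches)."""
    bytes_per_step = num_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_step

# Hypothetical 3B-parameter model on a phone with 60 GB/s of usable bandwidth.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: <= {max_steps_per_s(3e9, bits, 60):.0f} steps/s")
```

Halving the bits per weight doubles the bound, which is one reason low-precision weights matter as much for bandwidth as for capacity.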

Qualcomm’s Hexagon NPU builds on its DSP roots with optimized local memory and efficient data paths to main system memory. Its heterogeneous design allows intelligent data placement and movement, reducing the overall memory bandwidth that AI tasks require. This tradeoff is critical for managing the finite bandwidth of mobile platforms, especially with diverse model sizes and concurrent AI operations. Effective bandwidth utilization is key to avoiding bottlenecks that degrade AI performance.
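Local memory reduces DRAM traffic by reusing data. The sketch below counts DRAM traffic for a tiled matrix multiplication, the core operation of convolutions and dense layers. It also picks the largest tile that fits a hypothetical 64 KiB local buffer. This is a generic tiling model, not a description of Hexagon’s memory hierarchy.

```python
def matmul_dram_traffic(m: int, n: int, k: int, tile: int) -> int:
    """Elements moved to/from DRAM for C = A @ B (A: m x k, B: k x n) with square
    tile x tile output tiles. Each output tile reads a tile x k strip of A and a
    k x tile strip of B once, then writes its finished tile of C.
    Assumes m and n are multiples of `tile`."""
    tiles = (m // tile) * (n // tile)
    reads = tiles * (tile * k + k * tile)
    writes = m * n
    return reads + writes

def largest_tile(k: int, buffer_elems: int, candidates=(8, 16, 32, 64, 128)):
    """Biggest tile whose A strip, B strip, and C tile fit in local memory together."""
    fitting = [t for t in candidates if 2 * t * k + t * t <= buffer_elems]
    return max(fitting, default=None)

# Hypothetical 64 KiB local buffer holding INT8 elements, 256 x 256 x 256 layer.
t = largest_tile(k=256, buffer_elems=64 * 1024)
print(t, matmul_dram_traffic(256, 256, 256, 1), matmul_dram_traffic(256, 256, 256, t))
```

A 64 x 64 tile cuts traffic for this layer from about 33.6 million to about 0.59 million elements, roughly a 57x reduction.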

MediaTek’s APU integrates dedicated memory controllers and caching mechanisms to ensure efficient data flow. Its design often includes optimizations for common neural network memory access patterns, aiming to reduce latency and improve throughput across model sizes. Efficient memory handling is paramount because limited on-chip memory and bandwidth would otherwise restrict the size and complexity of deployable models. Smartphone RAM ceilings, typically 6 to 16 GB, also cap the total footprint of models and their intermediate data.

Software Ecosystem

The usability of an AI accelerator heavily depends on its software ecosystem.

Apple Neural Engine: Developers primarily interact with the Neural Engine through Core ML, Apple’s machine learning framework. Core ML compiles and optimizes models and decides whether each part runs on the Neural Engine, GPU, or CPU. Apple does not expose a public low-level API for the Neural Engine. ML Compute and Metal Performance Shaders target the CPU and GPU, so they offer lower-level control over those units rather than over the Neural Engine.
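Frameworks like Core ML can split a model so that supported layers run on the Neural Engine while the rest fall back to the CPU or GPU. This is not Core ML’s actual partitioning logic or op-support list. The minimal sketch below assumes a simple rule: a supported op goes to the accelerator, anything else goes to the CPU. The op names are hypothetical, and the point is to show how one unsupported op fragments a model.

```python
from itertools import groupby

def assign_device(op: str, accelerator_ops: set) -> str:
    # Hypothetical rule: supported ops go to the accelerator, the rest fall back to CPU.
    return "neural_engine" if op in accelerator_ops else "cpu"

def partition(ops: list, accelerator_ops: set) -> list:
    """Split a linear op sequence into contiguous (device, ops) segments."""
    return [(device, list(group))
            for device, group in groupby(ops, key=lambda op: assign_device(op, accelerator_ops))]

model = ["conv", "relu", "custom_nms", "conv", "softmax"]
supported = {"conv", "relu", "softmax"}  # illustrative, not Apple's real op list
segments = partition(model, supported)
print(segments)
print("device hand-offs:", len(segments) - 1)
```

Each hand-off between segments adds synchronization and data movement, so a single unsupported layer in the middle of a model can erase much of the accelerator’s benefit.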

Qualcomm Hexagon NPU: Qualcomm provides the Neural Processing SDK (SNPE) as part of the broader Qualcomm AI Engine. It lets developers deploy trained models from frameworks such as TensorFlow and PyTorch, or in ONNX format, onto the Hexagon NPU, GPU, or CPU. The SDK manages the tradeoffs of distributing workloads across these heterogeneous cores. It optimizes for power and latency within the device’s tight mobile power envelope and thermal limits.
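SDKs of this kind commonly let developers list target runtimes in order of preference and fall back when the preferred one cannot run a model. The minimal sketch below models that idea with a hypothetical capability table. `select_runtime` and the runtime names are illustrative and are not SNPE’s API.

```python
def select_runtime(preferred: list, available: set, model_ops: list, supported: dict):
    """First runtime in preference order that is present and supports every op, else None."""
    needed = set(model_ops)
    for runtime in preferred:
        if runtime in available and needed <= supported.get(runtime, set()):
            return runtime
    return None

# Hypothetical capability table; real support depends on SDK version and chip.
SUPPORTED = {
    "npu": {"conv", "relu", "add"},
    "gpu": {"conv", "relu", "add", "resize"},
    "cpu": {"conv", "relu", "add", "resize", "custom_op"},
}
ORDER = ["npu", "gpu", "cpu"]
ALL = {"npu", "gpu", "cpu"}

print(select_runtime(ORDER, ALL, ["conv", "relu"], SUPPORTED))       # npu
print(select_runtime(ORDER, ALL, ["conv", "resize"], SUPPORTED))     # gpu
print(select_runtime(ORDER, ALL, ["conv", "custom_op"], SUPPORTED))  # cpu
```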

MediaTek APU: MediaTek offers the NeuroPilot AI platform, a comprehensive SDK that supports popular AI frameworks. NeuroPilot provides a unified API for developers to leverage the APU’s capabilities, including model conversion, optimization, and deployment across MediaTek-powered devices.

Real-World Deployment

These accelerators power a wide array of features in modern devices.

Apple Neural Engine: Drives critical features like Face ID, Animoji/Memoji, Deep Fusion and Photographic Styles in computational photography, on-device Siri processing, Live Text, Visual Look Up, and advanced ARKit applications. These features demand low latency and high throughput. The Neural Engine’s dedicated hardware makes that achievable on-device, avoiding the latency and privacy concerns of cloud-based processing. For a broader comparison of local versus remote inference, see our detailed analysis of On-Device AI vs Cloud AI: What Really Powers Your Gadgets in 2026? On-device execution also makes sophisticated models practical for features where cloud round-trips would add unacceptable latency or data-transfer cost.

Qualcomm Hexagon NPU: Enables always-on voice assistants (“Hey Google”), advanced camera features such as super-resolution zoom and semantic segmentation for portrait mode, AI-driven gaming optimizations, and on-device language translation. For ‘always-on’ features, the NPU’s power efficiency is critical, as continuous cloud communication would be impractical due to bandwidth and battery constraints on an edge device. Operating within mobile power envelopes of a few watts sustained is a fundamental design goal for such features.

MediaTek APU: Powers AI camera enhancements, including AI noise reduction, AI-HDR, AI-powered bokeh effects, and AI scene detection. It also contributes to AI-driven gaming optimizations, smart display features, and overall system efficiency. Running these diverse AI models on-device ensures immediate responsiveness and user privacy, both of which the round-trip latency and data exposure of cloud inference would compromise. On-device execution also avoids constantly streaming camera and sensor data off the device, though each model must then be sized to fit the device’s memory and power budget.

Which Design Is More Efficient

When evaluating Neural Engine vs Hexagon NPU vs MediaTek APU, there is no single answer to which design is “more efficient.” Efficiency depends heavily on workload type, power envelope, and ecosystem integration.

Apple’s Neural Engine excels in overall system efficiency for its tightly integrated ecosystem. Its performance-per-watt is high for a broad range of complex, general-purpose ML tasks, benefiting from deep hardware-software co-optimization.

Qualcomm’s Hexagon NPU demonstrates superior efficiency for specific, often DSP-heavy or “always-on” AI workloads. Its heterogeneous approach allows for fine-grained power management, making it highly efficient for tasks that can be offloaded from more power-hungry components.

MediaTek’s APU offers a strong balance of performance and power efficiency, particularly for the diverse demands of the Android ecosystem. Its multi-core, flexible design allows it to scale efficiently across various AI applications, providing competitive performance-per-watt in its target market segments.

Ultimately, efficiency is a function of workload, power budget, and integration, particularly within mobile power envelopes of a few watts sustained. In edge AI accelerators, every architectural choice shifts the balance between performance, power consumption, and the largest model that can be supported. That balance usually favors sustained performance over peak theoretical throughput. Each architecture is highly efficient within its intended design philosophy and ecosystem.

Key Takeaways

The Neural Engine vs Hexagon NPU vs MediaTek APU comparison shows how ecosystem strategy shapes AI hardware efficiency.

- Apple’s Neural Engine is a tightly integrated, high-performance accelerator optimized for its proprietary ecosystem and general ML tasks.

- Qualcomm’s Hexagon NPU leverages an evolved DSP with dedicated tensor cores, excelling in power-efficient, heterogeneous computing for always-on AI.

- MediaTek’s APU is a dedicated multi-core solution focused on balanced performance and efficiency for a wide range of Android AI applications.

- All three use low-precision arithmetic (INT8, INT4) and optimized memory access to maximize power efficiency.

- Their respective software ecosystems (Core ML, SNPE, NeuroPilot) provide developers with tools to leverage these accelerators.

- Efficiency is workload-dependent, with each design demonstrating strengths in different AI application scenarios.