
What Is AI Scene Detection in Phone Cameras?

AI scene detection in phone cameras uses on-device neural networks to analyze subjects, lighting, and composition in real time. The system automatically adjusts exposure, white balance, HDR, focus, and sharpening based on recognized scenes like portraits, food, night, or landscapes, enabling better photos without manual control.

Quick Facts About AI Scene Detection

- What it is: An AI system in smartphones that identifies scenes like portrait, food, night, pets, and landscapes in real time.

- Where it runs: On-device AI hardware such as NPUs, Neural Engines, and AI-enabled ISPs.

- Main purpose: Automatically optimize exposure, white balance, HDR, focus, and color processing.

- Core technologies: Convolutional Neural Networks (CNNs), semantic segmentation, multi-frame fusion, and AI tone mapping.

- Performance speed: Scene analysis often runs at 50–200 frames per second during camera preview.

- User benefit: Delivers better photos instantly without needing manual camera settings.

- Why it matters: Enables computational photography features like Night Mode, Portrait Mode, HDR fusion, and AI zoom.

Introduction

AI scene detection in phone cameras has become a core part of modern smartphone photography. Instead of relying only on autofocus and exposure adjustments, cameras now analyze the scene using artificial intelligence before and during capture. This allows the system to understand subjects, lighting, and environments, then automatically optimize settings and apply specialized image processing. The result is consistently better photos with minimal manual effort, turning complex photographic decisions into a seamless point-and-shoot experience.

Core Concept

AI scene detection in phone cameras is an automated process. The camera system analyzes visual content, either from a real-time preview feed or a captured frame, to identify the environment, subject, or prevailing lighting conditions. Based on this identification, the system automatically adjusts photographic settings and applies specific image processing algorithms. This intelligent analysis and optimization aim to produce an image tailored for the specific scene, ensuring optimal brightness, color accuracy, sharpness, and dynamic range. The effectiveness of AI scene detection in phone cameras depends on fast on-device processing and accurate classification models.

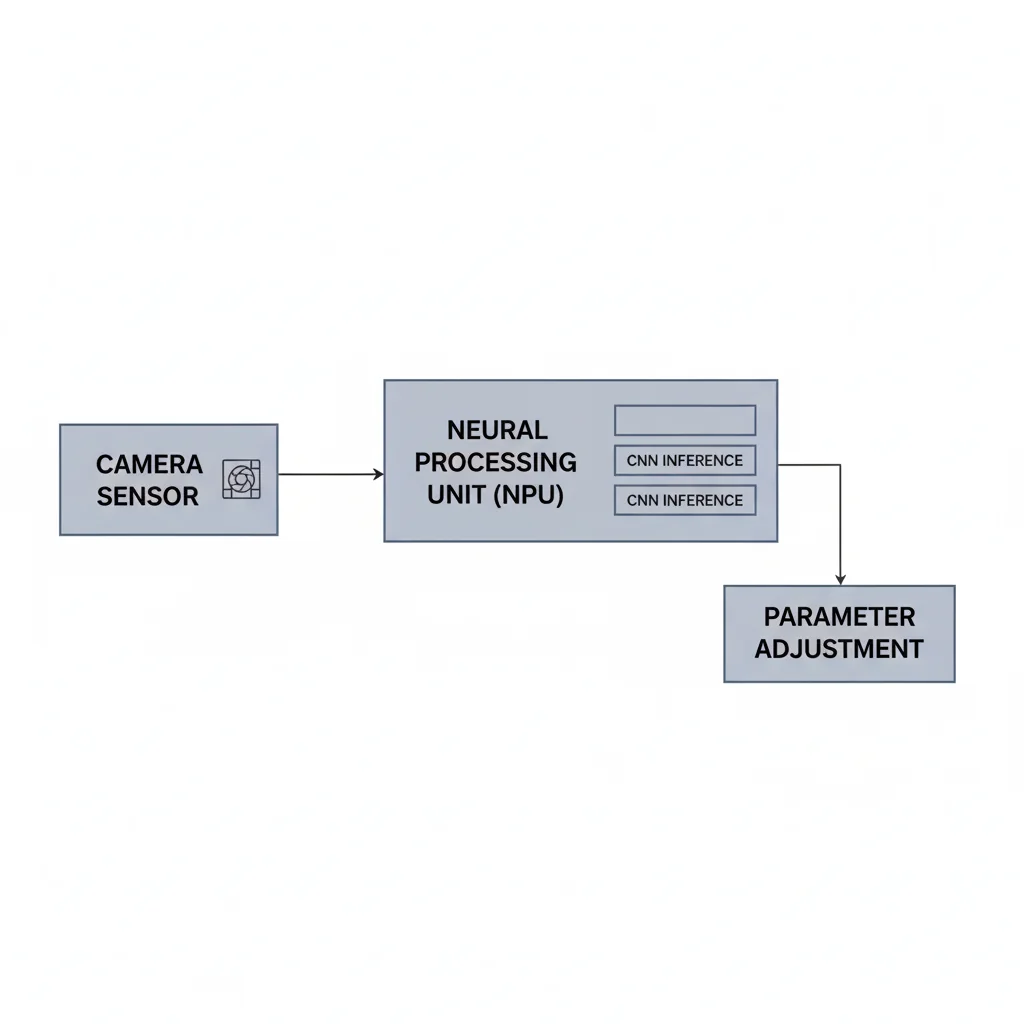

How It Works

The process of scene detection in a phone camera involves a series of sequential steps, beginning the moment the camera is activated.

- Image Acquisition: The camera sensor continuously captures raw visual data from the scene, forming the input for subsequent analysis.

- Preprocessing: Before detailed analysis, this raw image data undergoes initial preprocessing. This stage typically includes fundamental adjustments like noise reduction, basic color correction, and preliminary dynamic range adjustments.

- Feature Extraction: Specialized algorithms then analyze the preprocessed image to identify distinct visual features—critical cues that help define the scene. Examples include:

- Color histograms: Analyzing color distribution to identify dominant hues (e.g., vast blues for sky, greens for foliage).

- Texture patterns: Recognizing repetitive or unique surface characteristics (e.g., rough bark, smooth skin, intricate fabric).

- Edge detection: Identifying boundaries and outlines of objects within the frame.

- Object shapes: Recognizing common geometric or organic forms.

- Lighting conditions: Assessing brightness levels, contrast ratios, and the presence of backlighting.

- Element distribution: Analyzing how different visual components are arranged across the frame.

- Classification: The extracted features are fed into a classification model. Trained in advance on large sets of pre-labeled images, the model scores the frame against each scene type it knows (e.g., “landscape,” “portrait,” “food,” “night,” “pet,” or “text”) and selects the most probable one.

- Parameter Adjustment: Once a scene type is classified, the camera system automatically adjusts various photographic parameters specific to the detected scene to optimize the final image:

- Exposure: Modifying shutter speed, ISO sensitivity, and sometimes aperture.

- White Balance: Calibrating color temperature for accurate white rendering under different light sources.

- Focus Prioritization: Directing autofocus to specific subjects like faces or animals.

- Post-processing Enhancements: Applying targeted algorithms such as saturation boosts for food or landscapes, skin smoothing for portraits, sharpening for text, or activating High Dynamic Range (HDR) for high-contrast scenes.
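The steps above can be sketched in a few lines of Python. This is a toy illustration, not any vendor's pipeline. It assumes a preview frame is given as a list of RGB tuples, computes a few simple features (mean luminance, dominant-color ratios, a crude contrast measure), and replaces the trained classification model with hypothetical threshold rules. The names `extract_features`, `classify`, `adjust`, and the values in `PRESETS` are illustrative only.

```python
from dataclasses import dataclass

# Toy stand-in for a downscaled preview frame: a list of (r, g, b) pixels, 0..255.


@dataclass
class Features:
    mean_luma: float    # average brightness (Rec. 601 luma weights)
    blue_ratio: float   # fraction of pixels where blue is the dominant channel
    green_ratio: float  # fraction of pixels where green is the dominant channel
    contrast: float     # brightest luma minus darkest luma


def extract_features(pixels):
    lumas = [0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels]
    n = len(pixels)
    return Features(
        mean_luma=sum(lumas) / n,
        blue_ratio=sum(1 for r, g, b in pixels if b > r and b > g) / n,
        green_ratio=sum(1 for r, g, b in pixels if g > r and g > b) / n,
        contrast=max(lumas) - min(lumas),
    )


def classify(f):
    # Hypothetical threshold rules standing in for a trained CNN classifier.
    if f.mean_luma < 40:
        return "night"
    if f.blue_ratio + f.green_ratio > 0.6:
        return "landscape"
    return "auto"


# Hypothetical per-scene presets; the numbers are illustrative only.
PRESETS = {
    "night": {"exposure_bias_ev": 1.0, "multi_frame": True, "saturation": 1.0},
    "landscape": {"exposure_bias_ev": 0.0, "multi_frame": False, "saturation": 1.15},
    "auto": {"exposure_bias_ev": 0.0, "multi_frame": False, "saturation": 1.0},
}


def adjust(f, scene):
    params = dict(PRESETS[scene])  # copy so the shared preset is not mutated
    params["hdr"] = f.contrast > 200  # enable HDR for high-contrast frames
    return params


if __name__ == "__main__":
    dark_frame = [(10, 12, 15)] * 100
    feats = extract_features(dark_frame)
    scene = classify(feats)
    print(scene, adjust(feats, scene))
```

Keeping HDR as a separate contrast check, rather than part of the scene label, mirrors the idea that one scene (say, a landscape) may or may not need HDR depending on the lighting.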

Role of AI

Artificial Intelligence, particularly machine learning and deep learning, is central to the sophisticated scene detection capabilities in modern phone cameras. While traditional computer vision could perform some feature extraction, AI significantly elevates the accuracy, speed, and versatility of scene recognition. Without deep learning, scene detection in phone cameras could not achieve real-time accuracy.

- Machine Learning Foundation: AI algorithms are trained to recognize complex patterns in visual data indicative of specific scenes. This training involves feeding algorithms millions of labeled images, enabling them to learn intricate relationships between visual features and scene categories.

- Convolutional Neural Networks (CNNs): CNNs are the predominant deep learning architecture for image recognition in scene detection and are widely used in mobile vision tasks, as the TensorFlow Lite documentation also discusses. These networks excel at automatically learning hierarchical features from raw pixel data, from simple edges and textures to complex object parts and full scene compositions.

- Training and Inference:

- Training: During training, a CNN is exposed to massive datasets of meticulously labeled images (e.g., “beach,” “mountain,” “dog,” “person”). The network adjusts its internal parameters to minimize classification errors.

- Inference: Once trained, the CNN performs inference. When a user points their phone camera, the live preview or a captured frame is rapidly fed into the trained CNN, often on dedicated AI hardware. The CNN then outputs a probability distribution across its known scene categories, indicating the likelihood of the scene being a “landscape” (e.g., 90%), a “sky” (e.g., 8%), or another category.

- Dedicated Hardware: To handle the computational demands of real-time AI inference, most modern smartphones incorporate dedicated AI hardware, such as Neural Processing Units (NPUs) or custom System-on-a-Chip (SoC) components. These specialized processors are optimized for the parallel computation required by neural networks, enabling rapid processing (often 50–200 FPS) with greater power efficiency than general-purpose CPUs or GPUs. INT8 quantization, which stores weights and activations as 8-bit integers instead of 32-bit floating-point values, is commonly used to further optimize on-device neural processing. This hardware is part of a broader shift toward on-device AI, where processing happens locally instead of in the cloud, improving speed, privacy, and efficiency.

- Advanced AI Techniques:

- Semantic Segmentation: AI isolates foreground subjects from backgrounds, enabling precise background blur or targeted enhancements.

- Multi-frame Fusion: AI algorithms combine multiple exposures, using motion estimation to align frames and reduce noise, particularly in low light.

- AI Tone Mapping: Replaces fixed High Dynamic Range (HDR) curves with intelligent, scene-adaptive tone mapping for more natural results.

- Super-resolution Networks: Enhance detail in zoomed images by intelligently reconstructing missing pixel information.
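The probability distribution described under Inference is typically produced by a softmax over the network's raw output scores (logits). A minimal sketch, assuming the CNN has already produced logits for four hypothetical scene labels; the 0.6 confidence threshold and the fallback to neutral "auto" processing are illustrative assumptions, not documented behavior of any particular phone.

```python
import math

# Hypothetical label set; real phones use their own (often larger) category lists.
SCENES = ["landscape", "sky", "food", "portrait"]


def softmax(logits):
    # Subtract the largest logit first so math.exp cannot overflow.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_scene(logits, labels=SCENES, threshold=0.6):
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    # Below the (assumed) threshold, fall back to neutral processing
    # rather than applying a scene-specific look to an uncertain guess.
    if probs[best] < threshold:
        return "auto", probs[best]
    return labels[best], probs[best]


if __name__ == "__main__":
    print(pick_scene([4.0, 1.7, -1.0, -1.0]))  # confident: "landscape"
    print(pick_scene([1.0, 0.9, 0.8, 0.7]))    # ambiguous: falls back to "auto"
```

A confidence fallback like this is one plausible way to limit the impact of the misclassifications and ambiguous scenes covered under Limitations; whether a specific phone does exactly this is not stated in the sources here.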

Key Capabilities

AI scene detection underpins a wide array of advanced photographic features in modern smartphones, moving beyond basic adjustments to offer sophisticated image optimization.

- Automatic Setting Optimization:

- Adjusts exposure (shutter speed, ISO, aperture), white balance, and focus prioritization based on the detected scene.

- Dynamically manages dynamic range by activating HDR for high-contrast scenarios.

- Subject-Specific Enhancements:

- Applies sharpening for text to ensure readability.

- Softens skin tones in portraits for a more flattering look.

- Boosts colors for food and landscapes to make them more vibrant.

- Intelligent Focus:

- Automatically detects and prioritizes focus on faces, pets, or specific objects.

- Specialized Mode Activation:

- Automatically suggests or switches to specialized camera modes like Night Mode for low-light conditions or Macro Mode for close-up shots.

- Brand-Specific Implementations:

- Google Pixel:

- Real-time semantic segmentation during preview (Pixel 8).

- Multi-frame fusion with AI motion alignment for Night Sight.

- CNN-based denoising for low-light clarity.

- Real Tone uses a 3475-skin-tone dataset for accurate complexion rendering.

- Photo Unblur applies deconvolution neural networks for motion blur correction.

- Apple iPhone:

- Smart HDR 5 uses AI multi-exposure fusion with semantic segmentation, processed by the A-series Neural Engine in under 100ms.

- Deep Fusion performs pixel-level fusion of up to 9 images for enhanced detail in medium-light.

- Portrait Mode utilizes monocular depth estimation, supplemented by LiDAR on Pro models, and employs edge-aware bokeh with neural relighting and generative depth refinement.

- Samsung Galaxy:

- Scene Optimizer detects over 30 scene categories (e.g., Food, People, Flowers, Sky, Text) using MobileNet-based models.

- Night Mode uses multi-frame stacking with AI noise reduction.

- Space Zoom employs AI super-resolution for long-distance shots.

- Director’s View uses real-time object segmentation for multi-camera video.

- Other Manufacturers:

- Xiaomi 15 Ultra uses AI periscope zoom with diffusion-based upscaling.

- OnePlus 13 AI Erase utilizes inpainting Generative Adversarial Networks (GANs) for object removal.

- Vivo X200 Pro incorporates AI-controlled variable aperture (f/1.4–f/4.0) and uses LOFIC sensors to assist AI HDR stacking.


Real-World Usage

AI scene detection significantly enhances various common photography scenarios, simplifying the process and improving outcomes for a broad range of users.

- Everyday Photography: Simplifies the “point-and-shoot” experience by eliminating the need for manual setting adjustments, ensuring consistent quality across diverse daily situations without user intervention.

- Travel Photography: Handles extreme contrast, moving clouds, and distant detail without manual exposure control.

- Food Photography: Automatically detects food items and applies enhancements like increased saturation, improved contrast, and sharpened textures to make dishes appear more appetizing.

- Pet Photography: Recognizes animals, adjusting focus and exposure to clearly capture fast-moving subjects. It enhances fur textures and ensures accurate color rendition of pets.

- Portrait Photography: Identifies human faces, applying subtle skin-smoothing algorithms and simulating professional-grade background blur (bokeh) to make subjects stand out. It also ensures accurate complexion rendering across diverse skin tones.

- Low-Light Photography: Automatically activates specialized night modes, reduces image noise, and preserves crucial details in dimly lit environments. It combines multiple frames to brighten scenes and improve clarity.
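The frame-combining step in low-light photography (the multi-frame fusion described earlier) can be illustrated with a simplified align-and-average sketch. Assumptions: each frame is a single row of brightness values, hand shake appears as a whole-pixel horizontal shift, and alignment is a brute-force search over small shifts scored by mean absolute difference. Real pipelines align 2D tiles with sub-pixel motion estimation and merge more robustly; this only shows why averaging N aligned frames reduces random noise (roughly by a factor of √N).

```python
import random


def estimate_shift(ref, frame, max_shift=3):
    # Brute-force search for the integer shift s such that frame[i + s]
    # best matches ref[i], scored by mean absolute difference on the overlap.
    n = len(ref)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        diffs = [abs(ref[i] - frame[i + s]) for i in range(n) if 0 <= i + s < n]
        cost = sum(diffs) / len(diffs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def fuse(frames, max_shift=3):
    # Align every frame to the first (reference) frame, then average per pixel.
    ref = frames[0]
    n = len(ref)
    sums, counts = list(ref), [1] * n
    for frame in frames[1:]:
        s = estimate_shift(ref, frame, max_shift)
        for i in range(n):
            if 0 <= i + s < n:
                sums[i] += frame[i + s]
                counts[i] += 1
    return [total / count for total, count in zip(sums, counts)]


def mean_abs_error(xs, truth):
    return sum(abs(a - b) for a, b in zip(xs, truth)) / len(truth)


if __name__ == "__main__":
    rng = random.Random(0)
    width, max_shift = 64, 3
    # Random texture gives the alignment search a strong signal to lock onto.
    base = [rng.uniform(0, 255) for _ in range(width + 2 * max_shift)]
    truth = base[max_shift:max_shift + width]
    # Each frame sees the scene at a different offset (hand shake) plus noise.
    offsets = [3, 1, 5, 0, 6, 2, 4, 3]
    frames = [[base[o + i] + rng.gauss(0, 8) for i in range(width)] for o in offsets]
    fused = fuse(frames, max_shift)
    print(f"single frame error: {mean_abs_error(frames[0], truth):.2f}")
    print(f"fused error:        {mean_abs_error(fused, truth):.2f}")
```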

Limitations & Engineering Trade-offs

Despite its sophistication, AI scene detection in phone cameras presents several limitations and involves inherent engineering trade-offs.

- Misclassification: The system can sometimes misinterpret a scene, leading to suboptimal settings (e.g., classifying a sunset as a regular landscape, resulting in muted colors). Accuracy drops in extreme lighting, motion blur, or truly unique, unseen scenes.

- Over-processing: Aggressive AI enhancement can lead to unnatural-looking images, such as oversaturated colors, excessively smoothed skin that loses detail, or artificial sharpness that introduces halos or artifacts.

- Computational Overhead & Battery Life: Real-time AI inference, especially during live preview, demands significant processing power. AI preview processing can increase power draw by 10–30%. This computational load can impact battery life, particularly during extended shooting sessions or video recording. Engineers mitigate this through frame skipping and using low-resolution previews for analysis.

- Reduced User Control: For advanced users who prefer precise manual adjustments, the automated nature of AI scene detection can feel restrictive, overriding their desired creative control.

- Training Data Bias: The accuracy and performance of AI models heavily depend on the diversity and quality of their training data. Bias in this data can lead to poorer performance in specific or unusual scenarios, or for certain demographics (e.g., less accurate skin tone rendering for underrepresented groups).

- Uncertainty in Complex Scenes: Scenes with multiple, ambiguous elements or highly complex lighting conditions can challenge the AI, leading to less confident classifications and potentially less optimal adjustments.
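The battery mitigations mentioned under Computational Overhead (frame skipping and low-resolution analysis) can be sketched as a small wrapper. This is hypothetical: `PreviewAnalyzer`, `every_n`, and `factor` are illustrative names, a frame is a 2D list of grayscale values, and the classifier is any caller-supplied function. Only every Nth preview frame is analyzed, and it is first box-filtered down by `factor` in each dimension, so an 8× downscale leaves 1/64 of the pixels.

```python
def downscale(frame, factor):
    # Box filter: average each factor x factor block of a 2D grayscale frame.
    h, w = len(frame) // factor, len(frame[0]) // factor
    return [
        [
            sum(frame[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(w)
        ]
        for y in range(h)
    ]


class PreviewAnalyzer:
    """Hypothetical wrapper that throttles scene classification on preview frames."""

    def __init__(self, classify, every_n=4, factor=8):
        self.classify = classify  # any function: small frame -> scene label
        self.every_n = every_n    # analyze 1 of every N preview frames
        self.factor = factor      # downscale factor per dimension
        self.frame_index = 0
        self.calls = 0
        self.last_scene = "auto"

    def on_preview_frame(self, frame):
        if self.frame_index % self.every_n == 0:
            self.last_scene = self.classify(downscale(frame, self.factor))
            self.calls += 1
        # Skipped frames reuse the most recent label.
        self.frame_index += 1
        return self.last_scene


if __name__ == "__main__":
    def by_brightness(small):
        pixels = [p for row in small for p in row]
        return "night" if sum(pixels) / len(pixels) < 40 else "auto"

    analyzer = PreviewAnalyzer(by_brightness, every_n=4, factor=8)
    dim_frame = [[20] * 64 for _ in range(48)]  # 64x48 preview, 3072 pixels
    labels = [analyzer.on_preview_frame(dim_frame) for _ in range(32)]
    print(labels[-1], "classifier calls:", analyzer.calls, "of", len(labels))
```

The trade-off is latency: with `every_n=4`, a sudden scene change can take up to three extra frames to be reflected in the label.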

Who Benefits Most

AI scene detection primarily benefits users who prioritize convenience and consistent, high-quality results without needing to understand complex photographic principles.

- Casual Photographers: Individuals who simply want to “point and shoot” and get a good photo every time, without delving into manual settings like ISO, shutter speed, or white balance.

- Everyday Users: People capturing daily moments, family events, or quick snapshots where speed and ease of use are paramount.

- Travelers: Those who encounter a wide variety of scenes and lighting conditions and need their camera to adapt quickly and effectively without constant manual adjustments.

- Social Media Enthusiasts: Users who want visually appealing photos ready for sharing directly from their phone, often benefiting from the automatic enhancements applied by AI.

- Beginner Photographers: Individuals learning about photography who can rely on the AI to handle technical aspects while they focus on composition and framing.

Industry Direction

The integration of AI into phone cameras is a defining trend, with manufacturers continually pushing the boundaries of what’s possible through dedicated hardware and sophisticated algorithms. The same on-device intelligence driving phone cameras is also expanding into AI wearables, bringing real-time sensing and adaptation to devices beyond smartphones. Future improvements in AI scene detection in phone cameras will rely on better NPUs and smarter computational photography models.

- Dedicated AI Hardware: Most major smartphone manufacturers now integrate AI-powered scene detection, leveraging dedicated AI hardware like Neural Processing Units (NPUs) or custom chips (e.g., Apple’s Neural Engine, Google’s Tensor G3, Qualcomm’s Snapdragon NPUs, Samsung’s Exynos NPUs). AI ISPs (Image Signal Processors) further offload CPU/GPU tasks, reducing power usage by up to 5x. Qualcomm’s Snapdragon platforms (https://www.qualcomm.com/products/snapdragon) are one example of this NPU-based approach to mobile AI acceleration.

- Advanced Computational Photography: The industry is moving towards more granular and intelligent image processing, including semantic segmentation, multi-frame fusion, AI super-resolution, and generative AI for editing.

- Sensor-AI Synergy: Innovations like Vivo X200 Pro’s AI-controlled variable aperture (f/1.4–f/4.0) and the use of LOFIC sensors to assist AI HDR stacking show a trend where sensor technology and AI work in tandem for superior image capture. Multi-frame HDR processing is a core concept in computational photography research.

- Software Frameworks: Mobile AI runtimes and APIs such as TensorFlow Lite and Android’s Neural Networks API (NNAPI) are common, enabling efficient on-device execution of complex neural network models.

- Expanding Scene Recognition: Phones commonly recognize around 20–30 scene types, and this number is expected to grow to cover more niche scenarios, along with better accuracy in complex or ambiguous settings.
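Runtimes such as TensorFlow Lite commonly run models with INT8 quantization, which maps floating-point values to 8-bit integers using an affine scheme, `real_value = scale * (q - zero_point)`. The sketch below derives `scale` and `zero_point` from an observed value range and round-trips a few numbers. It is a simplified per-tensor illustration, not TensorFlow Lite's converter code, and the function names are hypothetical.

```python
def quant_params(x_min, x_max, qmin=-128, qmax=127):
    # Widen the range to include 0.0 so that zero maps to an exact integer.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(xs, scale, zero_point, qmin=-128, qmax=127):
    # q = round(x / scale) + zero_point, clamped to the int8 range.
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs, scale, zero_point):
    # real_value = scale * (q - zero_point)
    return [scale * (q - zero_point) for q in qs]


if __name__ == "__main__":
    scale, zp = quant_params(-1.0, 3.0)
    values = [-1.0, -0.5, 0.0, 0.37, 1.2, 3.0]
    q = quantize(values, scale, zp)
    print("scale:", scale, "zero_point:", zp)
    print("int8:", q)
    print("restored:", [round(v, 4) for v in dequantize(q, scale, zp)])
```

Storing each value in 1 byte instead of 4 (float32) shrinks the model roughly fourfold and suits integer NPU arithmetic; the cost is a rounding error of at most `scale / 2` for values inside the calibrated range.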

Frequently Asked Questions (FAQ)

What is AI scene detection in phone cameras?

AI scene detection in phone cameras is an on-device artificial intelligence system that analyzes the scene in real time. It identifies subjects, lighting conditions, and environments, then automatically adjusts exposure, HDR, focus, and color settings to improve photo quality.

Does AI scene detection work without the internet?

Yes. AI scene detection runs directly on the phone using dedicated hardware like a Neural Processing Unit (NPU) or Neural Engine. Because processing happens on-device, it works offline and responds instantly during camera preview.

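How does AI know whether a scene is food, portrait, or night?

A neural network, typically a CNN trained on millions of labeled images, analyzes the preview frame's colors, textures, shapes, and brightness, then outputs a probability for each scene category it knows. The most probable category determines which adjustments the camera applies.

Does AI scene detection help in low light?

Yes. When the system detects low light, it can automatically activate Night Mode, use multi-frame fusion, reduce noise, and adjust exposure. This helps produce brighter and clearer images without manual camera adjustments.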

Can AI scene detection make photos look unnatural?

It can. Over-processing may lead to overly saturated colors, excessive skin smoothing, or artificial sharpness. Manufacturers balance image enhancement with natural appearance to avoid these issues.

Is AI scene detection the same as HDR?

No. HDR is one of the tools AI can activate. Scene detection first identifies the environment, then decides whether HDR, night processing, or other enhancements should be applied.

Which smartphones use AI scene detection?

Most modern smartphones use it, including Google Pixel, Apple iPhone, Samsung Galaxy, Xiaomi, Vivo, and OnePlus devices. Each brand implements it using its own AI hardware and computational photography algorithms.

Conclusion

AI scene detection in phone cameras has become a core pillar of computational photography and has fundamentally reshaped smartphone photography, transforming devices into intelligent imaging companions. By automating the complex interplay of photographic settings and applying sophisticated post-processing, it empowers users to consistently capture optimized images across diverse scenarios. While challenges like misclassification and computational overhead persist, continuous advancements in dedicated AI hardware, sophisticated deep learning models, and innovative computational photography techniques are steadily refining its capabilities. As AI becomes even more deeply integrated with sensor technology and image processing pipelines, the future promises even more intuitive, accurate, and creatively powerful mobile photography experiences, further blurring the lines between amateur and professional results.
