
Comparing AI workloads on 5nm and 3nm chips reveals a major shift in semiconductor efficiency and on-device AI performance. While 5nm chips enabled advanced neural processing in modern smartphones, 3nm technology delivers higher transistor density, lower energy per operation, and better sustained AI performance.

The transition from 5nm to 3nm process nodes improves AI workload efficiency in two main ways. First, higher transistor density allows larger, more complex Neural Processing Units (NPUs) and bigger on-chip caches in the same die area, which directly affects how large a model can run locally. Second, 3nm transistors are more power efficient, which yields higher sustained AI performance, less thermal throttling, and better battery life in mobile and edge devices whose sustained power budget is typically only a few watts. These gains matter most for generative AI and real-time multi-modal models running on-device, where power and thermal limits cap the size and complexity of the models that can execute locally.

The 5nm vs 3nm comparison for AI workloads ultimately comes down to performance per watt and sustained performance. As AI models grow, process scaling, together with device RAM and memory bandwidth, determines how many parameters can run locally without overheating the device or draining its battery.

Moving to 3nm manufacturing enables higher transistor density, improved switching efficiency, and better thermal behavior within the same silicon footprint. For modern System-on-Chips (SoCs), this translates into stronger AI acceleration, larger caches, and higher sustained throughput, all of which are critical for generative AI and real-time multi-modal processing on edge devices.

In short, 3nm chips process more AI operations per watt, sustain peak performance longer, and enable larger on-device models compared to 5nm designs.
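The "more AI operations per watt" claim can be made concrete with simple arithmetic: under a fixed power budget, sustained throughput is the budget multiplied by efficiency (TOPS per watt). The sketch below assumes a 3 W sustained envelope and two invented efficiency values; neither number describes a real 5nm or 3nm chip.

```python
# Sketch: throughput that fits inside a fixed sustained power budget.
# The efficiency figures and the 3 W budget are HYPOTHETICAL placeholders,
# not measurements of any real 5nm or 3nm chip.

def sustained_tops(power_budget_w: float, tops_per_watt: float) -> float:
    """Throughput (TOPS) available when the AI engine is capped at power_budget_w."""
    return power_budget_w * tops_per_watt


def energy_per_op_pj(tops_per_watt: float) -> float:
    """Energy per operation in picojoules: 1 TOPS/W = 1e12 ops per joule = 1 pJ/op."""
    return 1.0 / tops_per_watt


BUDGET_W = 3.0  # assumed sustained mobile envelope ("a few watts")
for node, eff in [("5nm (hypothetical)", 10.0), ("3nm (hypothetical)", 13.0)]:
    print(f"{node}: {sustained_tops(BUDGET_W, eff):.1f} TOPS sustained, "
          f"{energy_per_op_pj(eff):.3f} pJ per operation")
```

With the power budget held constant, any gain in TOPS per watt converts one-for-one into sustained throughput.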

What 5nm and 3nm Architectures Do for AI Workloads

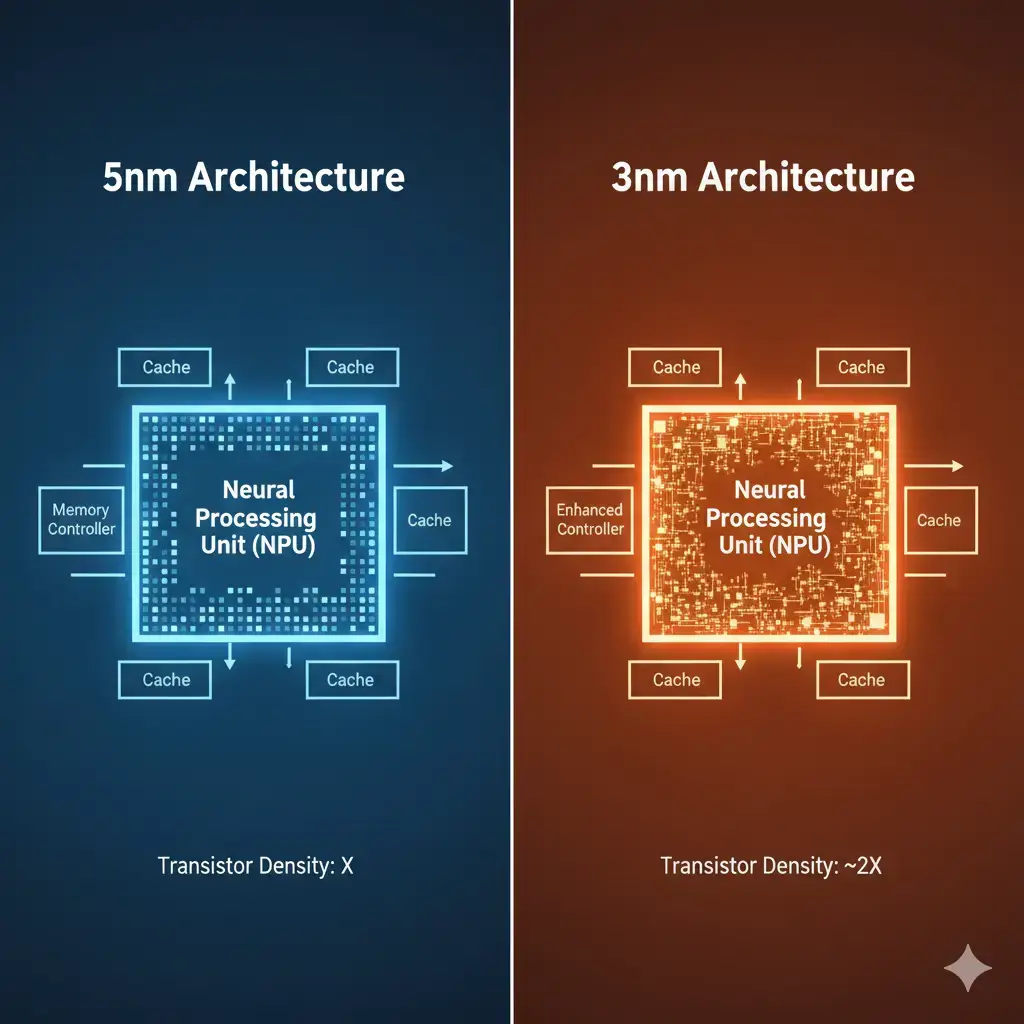

5nm Architecture: Chipsets built on 5nm process technology integrate dedicated AI accelerators such as Neural Processing Units (NPUs), Digital Signal Processors (DSPs), and specialized GPU cores. These architectures efficiently handle current-generation AI tasks such as image recognition, natural language processing, and basic on-device inference. For larger models, however, the limited compute and memory resources of these chips often require model quantization or architectural pruning. Smartphone RAM, typically 8 to 16 GB, frequently sets the maximum model size that can be loaded. Overall, 5nm designs provide a solid foundation for many contemporary AI applications, balancing performance against power consumption.
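A rough way to see how RAM ceilings limit model size is to multiply the parameter count by bytes per parameter. The sketch below is a simplification: it counts weights only (no activations or KV cache), and the assumption that a single app can use about half of device RAM is a hypothetical placeholder, not a platform rule.

```python
# Sketch: does a model's weight footprint fit in the RAM one app can use?
# ASSUMPTIONS: weights only (no activations/KV cache); usable_fraction is hypothetical.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(num_params: float, dtype: str) -> float:
    """Weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, device_ram_gb: float,
         usable_fraction: float = 0.5) -> bool:
    """The OS and other apps share RAM, so only part of it is available."""
    return weights_gb(num_params, dtype) <= device_ram_gb * usable_fraction


params = 7e9  # a hypothetical 7B-parameter model
for ram in (8, 12, 16):
    for dtype in ("fp16", "int8", "int4"):
        print(f"{ram} GB RAM, {dtype}: {weights_gb(params, dtype):.1f} GB "
              f"-> fits={fits(params, dtype, ram)}")
```

Under these assumptions, halving bytes per parameter through quantization matters as much as adding RAM, which is why quantization is so common on 5nm-era devices.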

3nm Architecture: The 3nm process node enables a new generation of SoCs with substantially stronger AI capabilities. It makes room for larger, more complex NPUs with more parallel processing units and deeper pipelines. This allows larger, more parameter-rich models to run directly on-device, reducing reliance on cloud inference for many common tasks and lowering latency. Cloud AI, however, still offers significantly greater scale for the most demanding models. The architecture targets the growing demands of large language models (LLMs), generative AI, and real-time multi-modal processing, delivering higher peak and sustained performance within mobile power envelopes that typically limit sustained consumption to a few watts.

What Changes From 5nm to 3nm for AI Chips?

Modern 5nm and 3nm chips integrate dedicated AI accelerators such as Neural Processing Units (NPUs), which handle tensor operations more efficiently than CPUs or GPUs. If you’re unfamiliar with how these accelerators work, this detailed breakdown of smartphone NPUs explains their architecture and role in on-device AI. At its core, the move from 5nm to 3nm is about transistor scaling and what it offers AI SoC design. 3nm processes provide substantially higher transistor density, letting designers pack more specialized AI compute units or larger caches into the same silicon area, which directly affects the maximum model size that can be processed efficiently on-device.

According to TSMC’s official documentation on its 3nm process technology, the node delivers significantly higher transistor density and improved power efficiency compared to 5nm. Designers still have to decide how to spend the extra transistors: a constrained die area forces a tradeoff between general-purpose processing and dedicated AI acceleration to meet specific performance targets. The density improvement also brings inherent power efficiency gains from reduced capacitance and leakage currents.
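To illustrate what a density gain buys, the sketch below treats the ~60% figure from the comparison table as an assumed logic-density ratio and ports a hypothetical 10 mm² NPU block. Because SRAM, analog, and I/O circuits typically shrink less than logic, the illustrative `scalable_fraction` parameter lets part of the block keep its original size.

```python
# Sketch: what a logic-density gain buys within a fixed die-area budget.
# DENSITY_GAIN mirrors the "~60% higher" table entry and is an ASSUMPTION.
# scalable_fraction is a HYPOTHETICAL share of the block that actually shrinks.

DENSITY_GAIN = 1.6  # assumed 3nm / 5nm logic density ratio


def area_at_3nm(area_5nm_mm2: float, scalable_fraction: float = 1.0) -> float:
    """Area of a 5nm block after porting; only scalable_fraction of it shrinks."""
    shrunk = area_5nm_mm2 * scalable_fraction / DENSITY_GAIN
    unchanged = area_5nm_mm2 * (1.0 - scalable_fraction)
    return shrunk + unchanged


npu_5nm = 10.0  # hypothetical NPU block area in mm^2
for frac in (1.0, 0.7):
    new_area = area_at_3nm(npu_5nm, frac)
    # How many times this block would fit in the block's original 5nm area.
    print(f"scalable={frac:.0%}: {new_area:.2f} mm^2, "
          f"{npu_5nm / new_area:.2f}x as much of this block in the same area")
```

The second case shows why headline density figures overstate the real gain when part of a design does not scale.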

5nm vs 3nm: Quick Comparison Table

| Feature | 5nm Process Node (e.g., TSMC N5/N4) | 3nm Process Node (e.g., TSMC N3B/N3E) |
|---|---|---|
| Transistor Density | High | ~60% Higher |
| NPU Complexity | Advanced, dedicated units | Larger, more parallel, specialized |
| On-Chip Cache | Substantial | Larger, lower latency |
| Power Efficiency | Good | Superior (lower leakage, faster switching) |
| Memory Controller | High-bandwidth LPDDR5/5X | Higher-bandwidth LPDDR5X, LPDDR6-ready |
| Generative AI Capability | Limited | Strong |

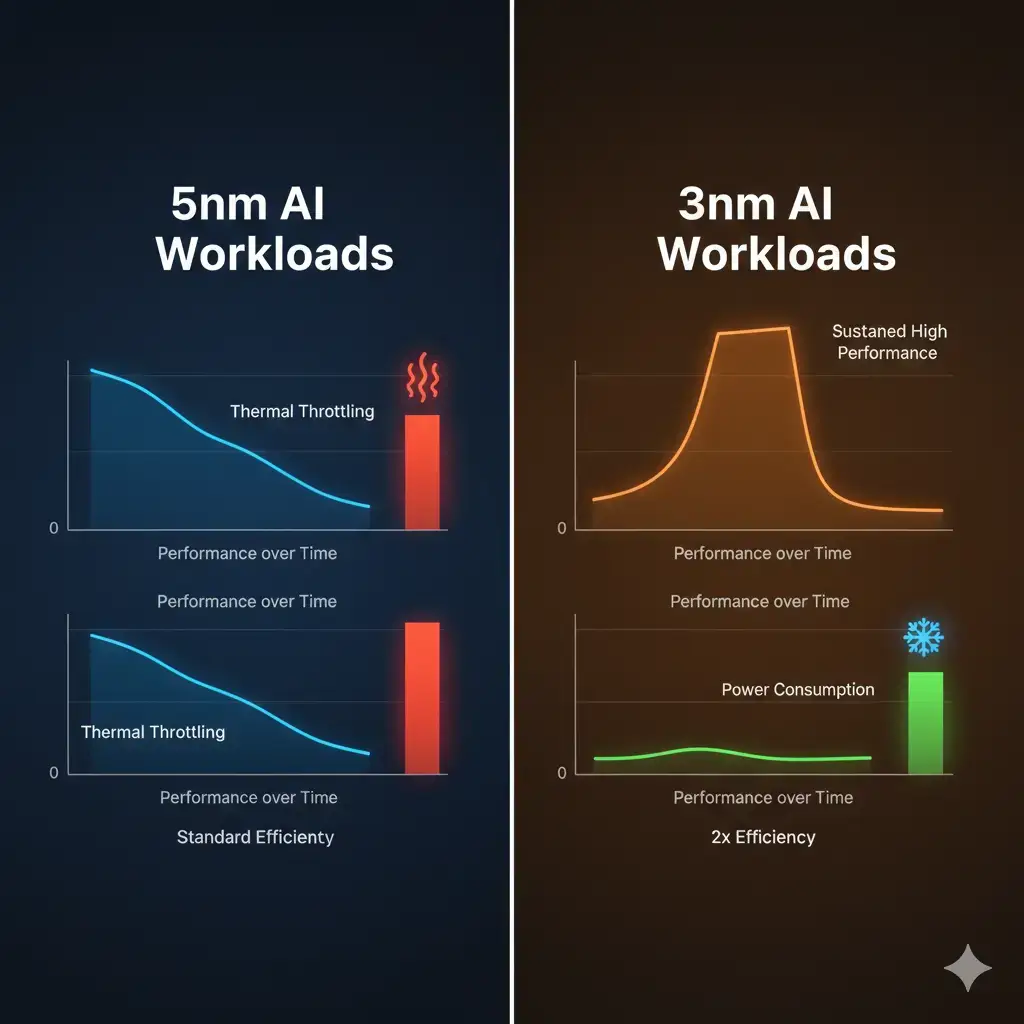

When comparing AI workloads on 5nm and 3nm chips, the differences are most visible in sustained performance and in generative AI tasks.

5nm vs 3nm AI Workload Performance Comparison

3nm chipsets generally deliver higher peak performance for AI workloads than their 5nm predecessors, because they integrate more AI processing units (e.g., NPU cores, tensor accelerators) and can run them at higher clock frequencies. Peak performance, however, can usually be held only for short bursts before the chip reaches its thermal design power (TDP) limit.

More importantly, 3nm’s superior power efficiency lets these chipsets hold a higher percentage of their peak performance for longer, reducing the likelihood that thermal throttling affects long-running tasks. Sustained performance is critical for real-time applications that need consistent, low-latency responses, since intermittent drops in processing capability degrade user experience and system responsiveness. It matters equally for workloads that run continuously or involve large, iterative computations, such as generative AI model inference.
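The link between efficiency and sustained performance can be illustrated with a toy lumped thermal model (one thermal resistance, one heat capacity) and a simple governor that caps power once the chip reaches its temperature limit. Every constant below, including peak TOPS, efficiencies, and thermal parameters, is a hypothetical assumption; real phones use far more elaborate thermal management.

```python
# Sketch: toy RC thermal model showing why better perf/W reduces throttling.
# All constants are HYPOTHETICAL and chosen only for illustration.

def simulate(tops_per_watt: float, peak_tops: float, seconds: float = 600.0,
             dt: float = 0.1, r_th: float = 10.0, c_th: float = 5.0,
             t_amb: float = 25.0, t_limit: float = 45.0) -> tuple[float, float]:
    """Return (average TOPS, fraction of peak) over a continuous workload.

    r_th: thermal resistance to ambient (degC per W)
    c_th: lumped heat capacity (J per degC)
    Governor: run at peak power until t_limit, then cap power at the level
    the package can shed in steady state at t_limit.
    """
    p_peak = peak_tops / tops_per_watt        # W needed to run at peak
    p_sustain = (t_limit - t_amb) / r_th      # W removable at t_limit
    temp, total_ops = t_amb, 0.0
    for _ in range(int(round(seconds / dt))):
        power = p_peak if temp < t_limit else min(p_peak, p_sustain)
        total_ops += power * tops_per_watt * dt               # TOPS x seconds
        temp += (power - (temp - t_amb) / r_th) / c_th * dt   # Euler step
    avg = total_ops / seconds
    return avg, avg / peak_tops


for node, eff in [("5nm (hypothetical)", 10.0), ("3nm (hypothetical)", 13.0)]:
    avg, frac = simulate(tops_per_watt=eff, peak_tops=40.0)
    print(f"{node}: {avg:.1f} TOPS average over 10 min ({frac:.0%} of peak)")
```

With these made-up numbers, both chips eventually settle at the same 2 W the package can dissipate, but the more efficient chip reaches its temperature limit later and converts those 2 W into more TOPS, so it keeps a larger fraction of its peak.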

Power Efficiency & Thermal Behavior

The inherent power efficiency of 3nm transistors has a large impact on AI workloads. Each operation consumes less energy, so the SoC draws less power while executing AI tasks, which helps keep it within the typical mobile power envelope of a few watts. Lower power consumption also means less heat.
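For a fixed real-time workload, better energy per operation shows up directly as lower power draw and longer runtime. In the sketch below, the per-frame cost, frame rate, efficiency values, and 15 Wh battery are all hypothetical, and the efficiencies stand in for effective (not peak) TOPS per watt.

```python
# Sketch: the same real-time AI workload at two efficiency levels.
# Frame cost, frame rate, efficiencies and battery size are HYPOTHETICAL.

def workload_power_w(gops_per_frame: float, fps: float, tops_per_watt: float) -> float:
    """Average accelerator power (W) needed to sustain the workload."""
    ops_per_s = gops_per_frame * 1e9 * fps
    return ops_per_s / (tops_per_watt * 1e12)


def hours_on_battery(power_w: float, battery_wh: float) -> float:
    """Runtime if this workload were the only load (ignores display, radios, etc.)."""
    return battery_wh / power_w


for node, eff in [("5nm (hypothetical)", 10.0), ("3nm (hypothetical)", 13.0)]:
    p = workload_power_w(gops_per_frame=500.0, fps=30.0, tops_per_watt=eff)
    print(f"{node}: {p:.2f} W for the AI workload, "
          f"~{hours_on_battery(p, battery_wh=15.0):.1f} h on a 15 Wh battery")
```

Because the workload is fixed, every watt saved is also a watt of heat the phone no longer has to dissipate.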

Heat dissipation is a fundamental hardware limit for any high-performance chip. A phone’s thermal budget is largely fixed by its form factor, so improved power efficiency means more AI work fits within that budget without compromising device reliability. As a result, 3nm chipsets sustain higher performance for longer before hitting thermal throttling limits, at which point performance can drop significantly to prevent overheating. This thermal headroom is crucial for mobile and edge devices, enabling more complex AI features without sacrificing battery life or device comfort.

Memory Bandwidth & On-Device Model Size Limits

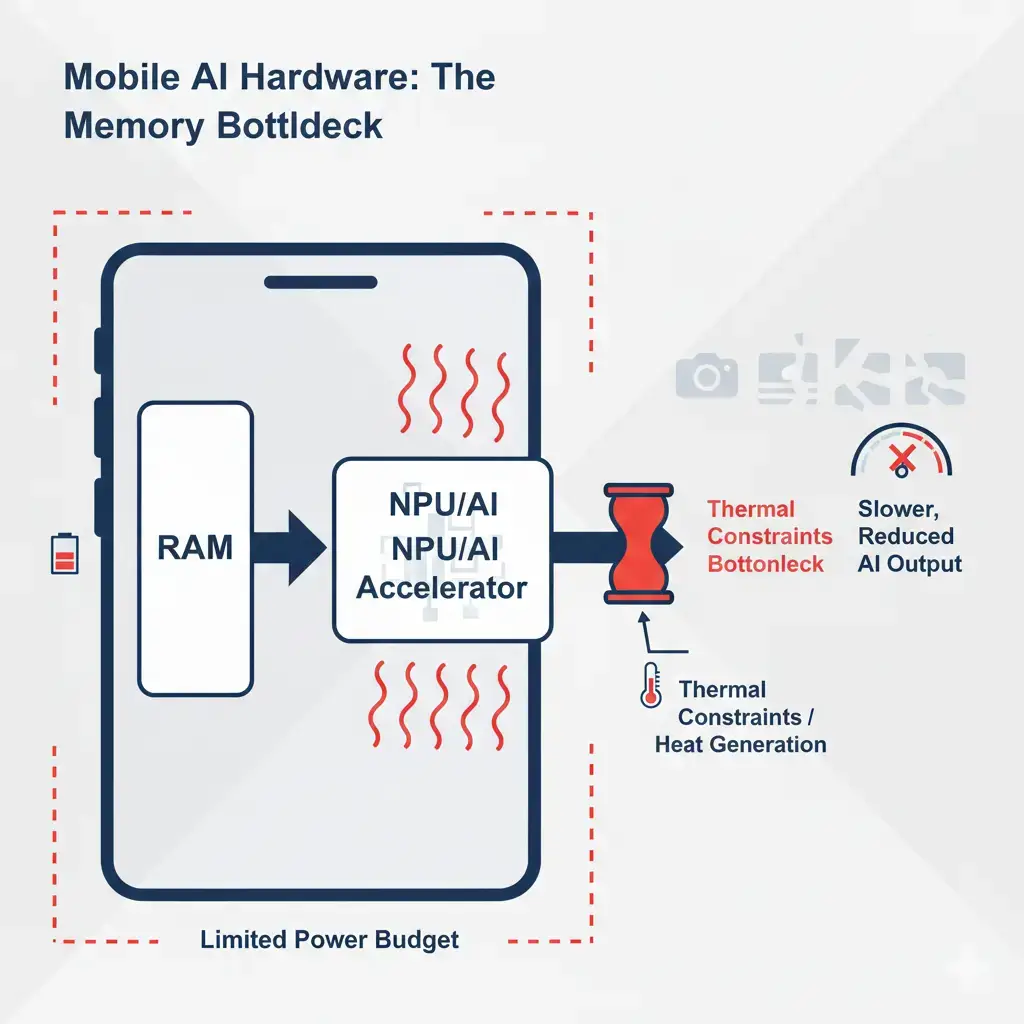

AI workloads are often memory-bound, particularly when processing large models or high-resolution data. Memory capacity and access speed are frequently the primary hardware limits for deploying large AI models, because model parameters and intermediate activations must move rapidly between memory and compute units. Smartphone RAM ceilings and memory bandwidth are therefore critical determinants of maximum model size and inference speed.
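The roofline model is a standard way to reason about memory-bound workloads: attainable throughput is the lower of the compute peak and memory bandwidth multiplied by arithmetic intensity (operations per byte moved from DRAM). The peak and bandwidth values below are hypothetical. Batch-1 LLM token generation sits at the low-intensity end, since each weight fetched is used for roughly one multiply-accumulate.

```python
# Sketch: roofline model for an AI accelerator.
# PEAK_TOPS and BW_GBS are HYPOTHETICAL placeholders.

def attainable_tops(peak_tops: float, bandwidth_gbs: float, ops_per_byte: float) -> float:
    """min(compute roof, memory roof); memory roof = bandwidth x arithmetic intensity."""
    memory_roof_tops = bandwidth_gbs * 1e9 * ops_per_byte / 1e12
    return min(peak_tops, memory_roof_tops)


PEAK_TOPS, BW_GBS = 40.0, 60.0  # hypothetical NPU peak and DRAM bandwidth
for intensity in (2, 50, 1000):  # operations performed per byte fetched from DRAM
    t = attainable_tops(PEAK_TOPS, BW_GBS, intensity)
    bound = "memory-bound" if t < PEAK_TOPS else "compute-bound"
    print(f"{intensity:>5} ops/byte -> {t:.2f} TOPS ({bound})")
```

At low intensity the NPU's peak is irrelevant: only more bandwidth or fewer bytes per operation (for example, quantized weights) raises throughput.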

Even with 3nm improvements, AI workloads remain heavily memory-bound, and smartphone RAM ceilings still limit how large a model can run locally. As explained in our analysis of AI memory bottlenecks on mobile devices, bandwidth and cache design often determine real-world inference speed more than raw TOPS numbers. 3nm SoC generations typically integrate more advanced memory controllers that support newer, higher-bandwidth LPDDR standards (e.g., LPDDR5X, LPDDR6). This increased bandwidth eases bottlenecks in data transfer between main memory (DRAM) and AI accelerators. Insufficient bandwidth leaves compute units idle, which reduces overall system throughput and increases inference latency, a common bottleneck especially for large language models. Furthermore, higher transistor density enables larger and faster on-chip caches (L1, L2, L3, and system-level caches), significantly reducing data access latency and improving compute unit utilization.
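For batch-1 LLM decoding, a common back-of-the-envelope bound is that each generated token must stream every weight from DRAM once, so tokens per second cannot exceed bandwidth divided by weight size. The sketch assumes a 64-bit LPDDR interface and a hypothetical 3.5 GB model (about 7B parameters at 4 bits); it ignores KV-cache traffic and on-chip cache reuse, so treat the result as a ceiling rather than a prediction.

```python
# Sketch: bandwidth ceiling on batch-1 LLM token generation.
# ASSUMPTIONS: 64-bit LPDDR bus; every weight is read from DRAM once per token;
# KV-cache traffic and on-chip cache reuse are ignored. Model size is hypothetical.

def peak_bandwidth_gbs(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical DRAM bandwidth in GB/s = transfer rate x bus width in bytes."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) / 1e9


def max_tokens_per_s(weight_gb: float, bandwidth_gbs: float) -> float:
    """Upper bound on decode speed if each token streams all weights once."""
    return bandwidth_gbs / weight_gb


WEIGHTS_GB = 3.5  # hypothetical ~7B-parameter model at 4 bits per weight
for label, rate in [("LPDDR5-6400", 6400), ("LPDDR5X-8533", 8533)]:
    bw = peak_bandwidth_gbs(rate, bus_width_bits=64)
    print(f"{label}: {bw:.1f} GB/s -> at most "
          f"{max_tokens_per_s(WEIGHTS_GB, bw):.1f} tokens/s")
```

The ceiling scales linearly with bandwidth and inversely with model size, which is why faster LPDDR and aggressive quantization both raise on-device generation speed.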

Software Optimization for 5nm vs 3nm AI Workloads

The effectiveness of 3nm hardware for AI hinges on the software ecosystem. Frameworks like Core ML, TensorRT, and NNAPI, along with model formats like ONNX, require optimization to leverage underlying silicon advancements. Compilers and runtimes are critical for translating high-level AI models into efficient, hardware-specific instructions.

Optimizing the software stack is a critical system tradeoff: it determines whether the theoretical gains of advanced hardware become practical improvements across diverse AI models, each with different memory and compute demands. This includes managing memory bandwidth and fitting models within available RAM. Tight hardware-software coupling, as with Apple’s Neural Engine and Core ML or NVIDIA’s Tensor Cores and TensorRT, lets software fully exploit the underlying silicon, so that 3nm’s raw compute translates into tangible performance gains for AI applications.
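Fitting models within available RAM usually starts with quantization. The sketch below shows one common technique, symmetric per-tensor int8 quantization, in plain Python; it is an illustration only, not what Core ML, TensorRT, or any specific toolchain does internally.

```python
# Sketch: symmetric per-tensor int8 weight quantization (one common technique;
# production toolchains use their own, more elaborate schemes).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 values in [-127, 127] using a single scale factor."""
    max_abs = max((abs(x) for x in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(x / scale))) for x in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


w = [0.12, -0.5, 0.33, 0.0, 0.49]
q, s = quantize_int8(w)
err = max(abs(a - b) for a, b in zip(w, dequantize(q, s)))
print(q, f"scale={s:.5f}", f"max error={err:.5f}")
# 1 byte per weight instead of 4 (fp32) or 2 (fp16); per-weight rounding error <= scale / 2
```

Storing one byte per weight halves memory traffic relative to fp16, which helps both RAM fit and the bandwidth-bound cases discussed earlier.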

Real Device Examples

The benefits of 3nm for AI are already visible in premium consumer devices. Chipsets like Apple’s A17 Pro and M3, built on a 3nm process, showcase stronger on-device generative AI, advanced computational photography, and real-time multi-modal processing. These improvements stem from higher transistor density and improved energy efficiency at the silicon level.

This on-device capability addresses edge constraints by processing data close to its source, which is vital for applications that need immediate responses or operate with limited or no network connectivity. Processing data locally also enhances privacy by minimizing transfers to external servers, a key consideration for sensitive applications. Flagship SoCs from Qualcomm, MediaTek, Google, and Samsung are also moving to 3nm, bringing similar advancements to a wider range of devices. Across these platforms, more sophisticated AI features can run locally within strict mobile power envelopes, reducing latency and reliance on cloud infrastructure for many common applications, although cloud AI still offers far greater scale for the most demanding tasks.

5nm vs 3nm AI Workloads: Which Is More Efficient?

The gap between 5nm and 3nm is widest during long-running inference sessions. For AI workloads, 3nm is the more efficient node: its higher transistor density enables more powerful, specialized AI accelerators, and each transistor consumes less power. Together these address the hardware limits of previous generations, allowing a wider range of AI models, including larger ones, to run efficiently at the edge within typical mobile power envelopes of a few watts. The combination of more compute, lower power consumption, larger caches, and higher memory bandwidth means 3nm chipsets process more AI operations per watt, sustain peak performance longer, and handle more complex models with greater responsiveness, significantly reducing the impact of thermal throttling on user experience.

Key Takeaways

- For AI workloads, 3nm delivers clearly better efficiency and sustained performance than 5nm.

- Superior power efficiency at 3nm translates directly into higher sustained AI performance and less thermal throttling for demanding workloads, easing the thermal design power limits that constrain mobile chips. This is vital for maintaining performance within mobile power envelopes of a few watts.

- Enhanced memory controllers and larger on-chip caches on 3nm mitigate memory bottlenecks, critical for AI data handling and enabling larger model sizes to reside closer to compute. Smartphone RAM ceilings, however, still impose a practical limit on the largest models.

- 3nm chipsets are essential for efficiently deploying complex generative AI and multi-modal models directly on-device, reducing latency and reliance on cloud infrastructure for many edge applications, though cloud AI still provides greater scale for the most demanding tasks.

- Optimized software frameworks, compilers, and runtimes are needed to fully exploit 3nm’s hardware advantages; matching software to both hardware capabilities and model requirements is a critical system tradeoff.