Trending Posts

February 21, 2026

Modern AI image processing on System-on-Chips (SoCs) relies on tight integration between the Image Signal Processor (ISP) and Neural Processing...

February 19, 2026

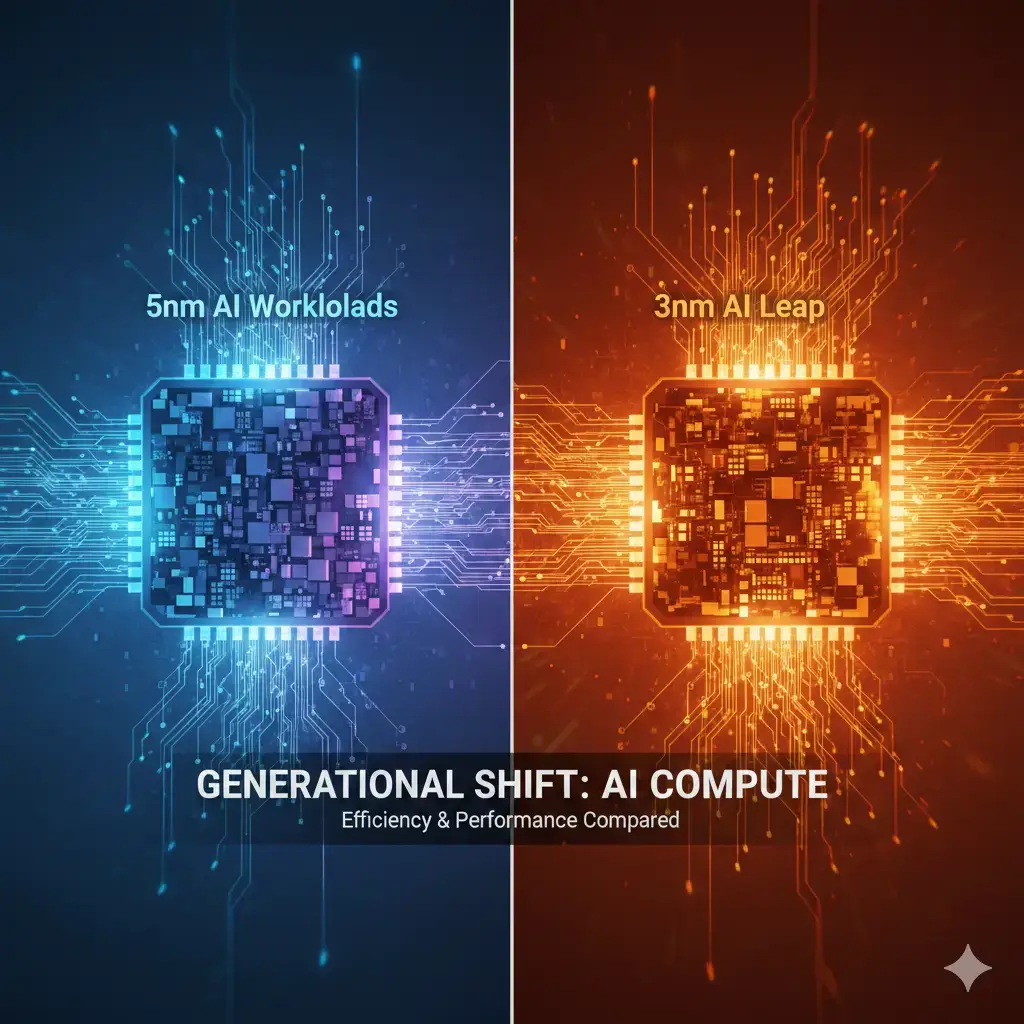

The shift from 5nm to 3nm process nodes improves AI performance-per-watt and compute density, but the real story lies in...

February 17, 2026

A deep technical comparison of Apple Neural Engine, Qualcomm Hexagon NPU, and MediaTek APU—covering architecture, performance, power efficiency, and real-world...

February 8, 2026

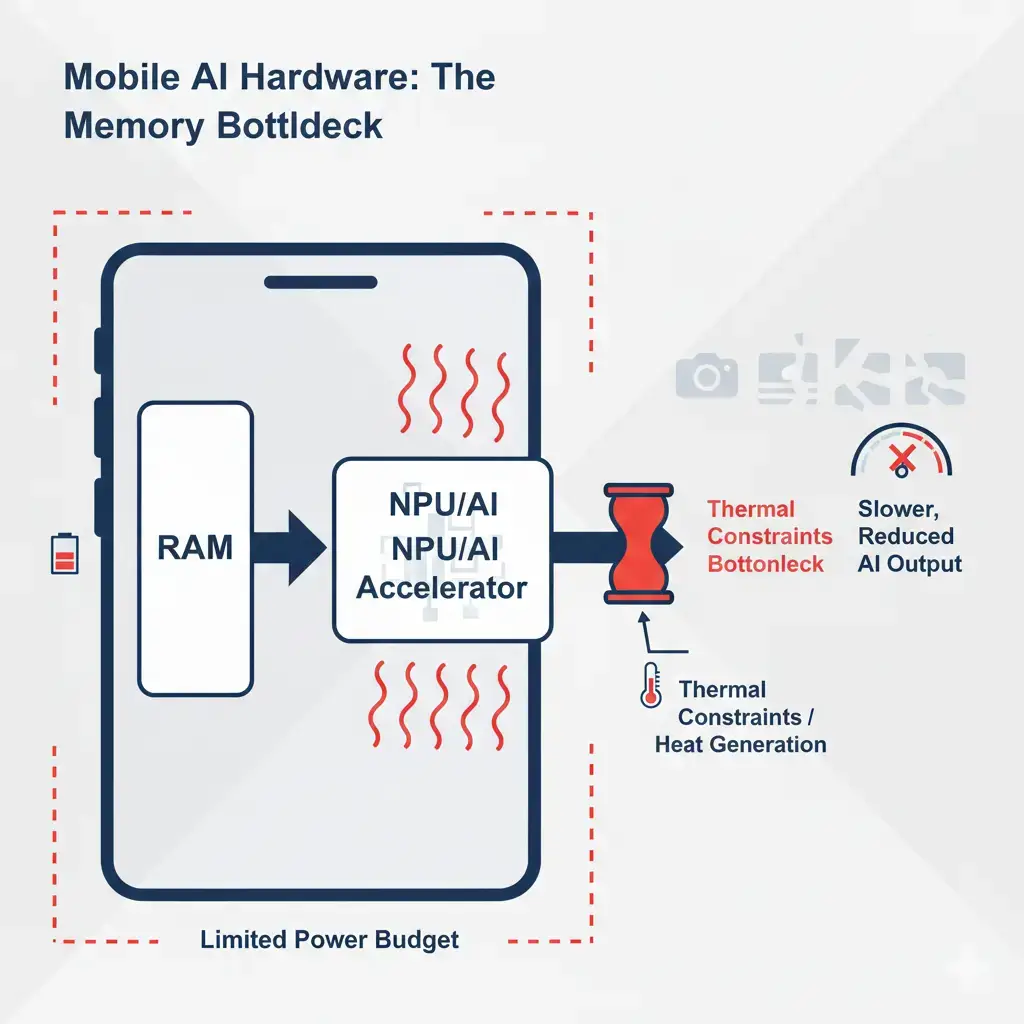

On-device AI performance is frequently constrained by memory bandwidth and capacity rather than raw compute power. These on-device AI memory...

February 8, 2026

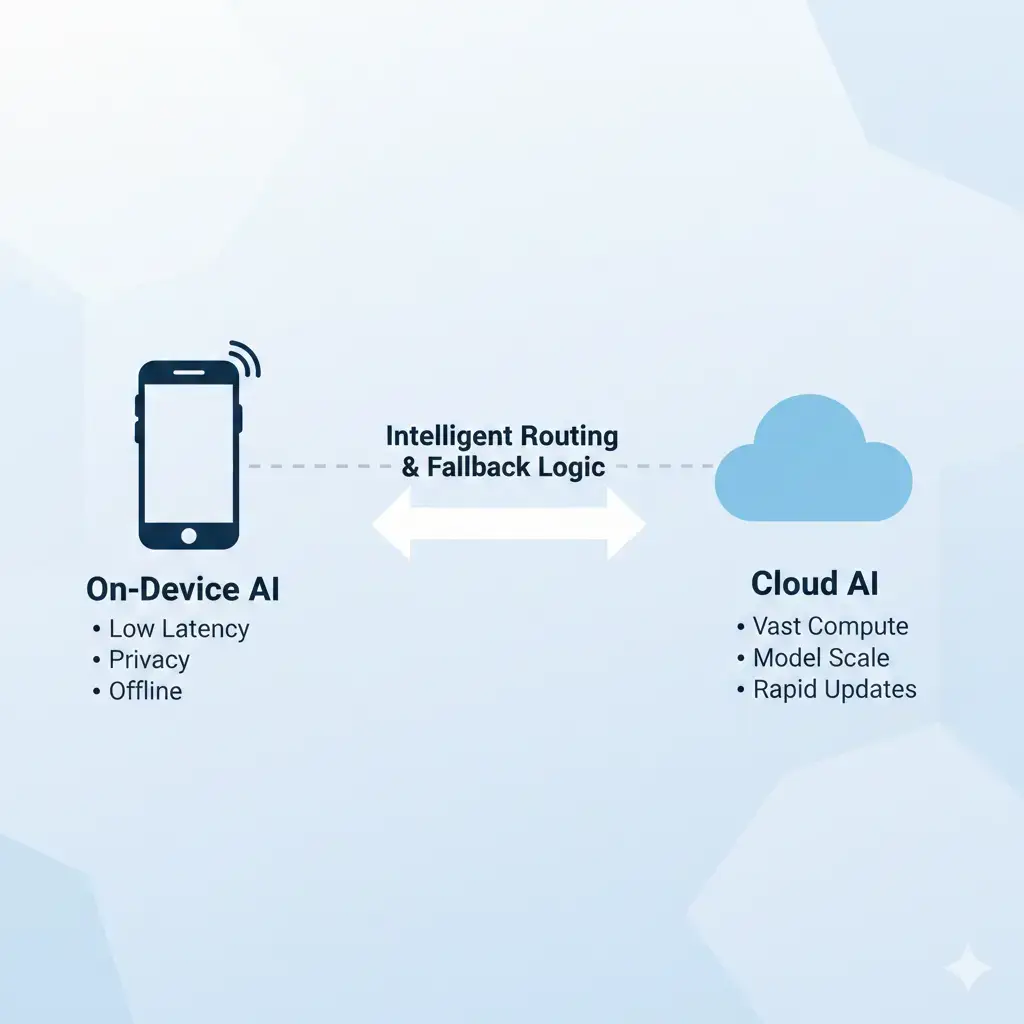

On-device AI operates within strict power, thermal, and memory limits. Cloud fallback is a hybrid strategy that routes AI tasks...