How AI Image Processing Uses ISP + NPU Together

The 5 Essential Architecture Insights

AI Image Processing Architecture in Modern…

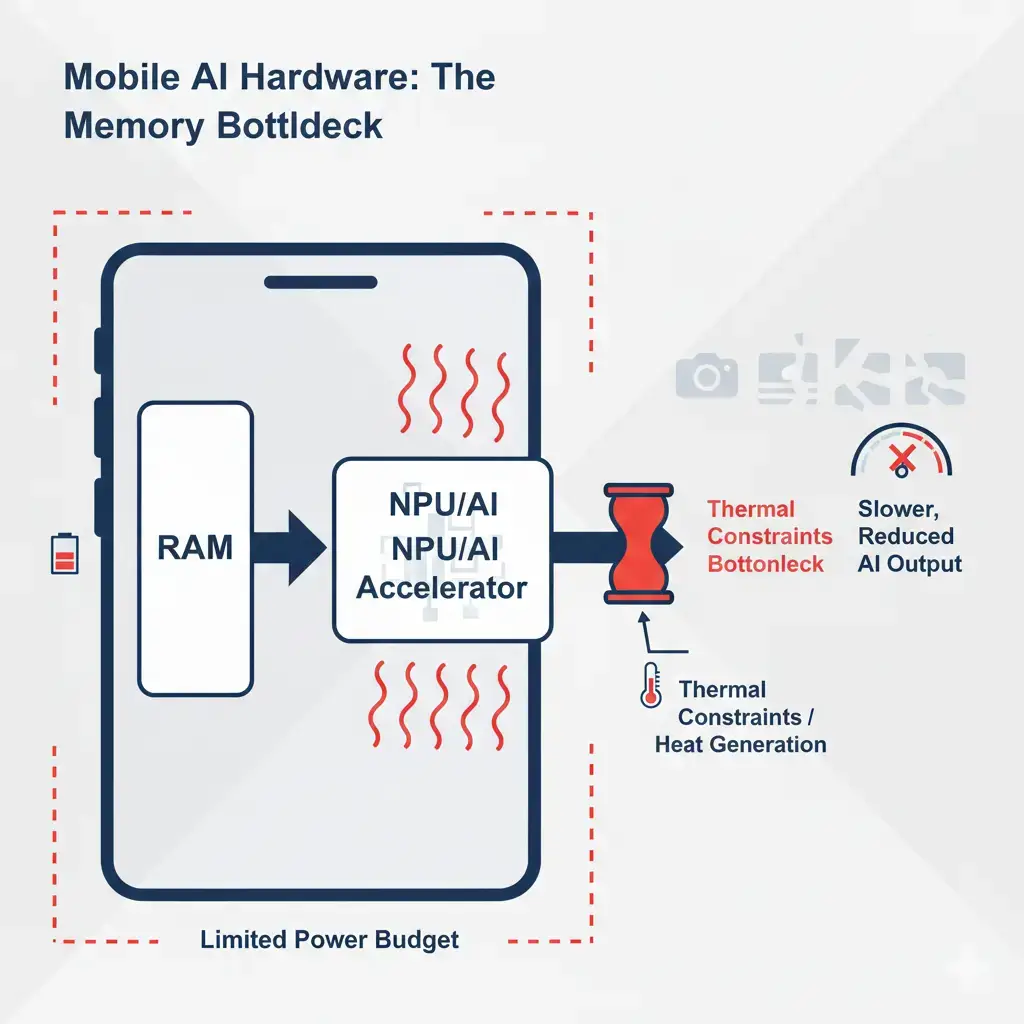

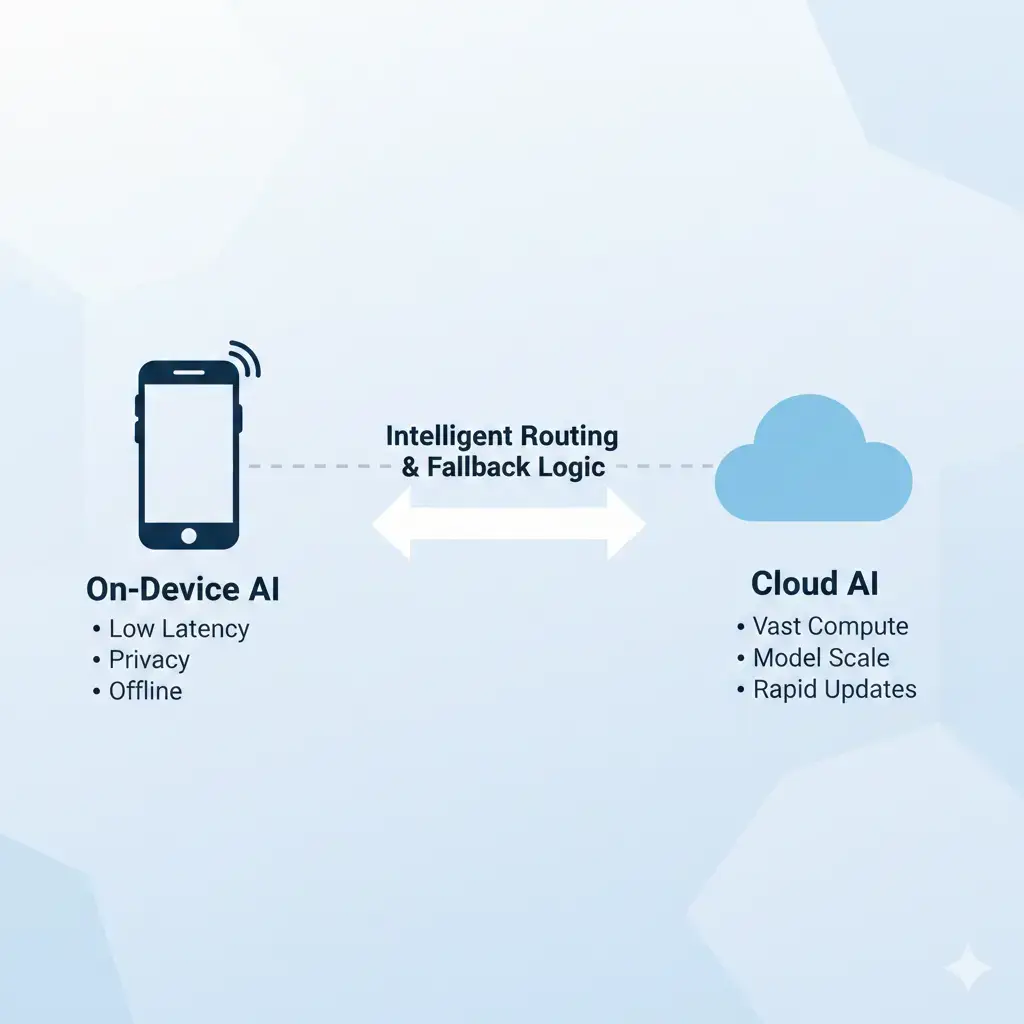

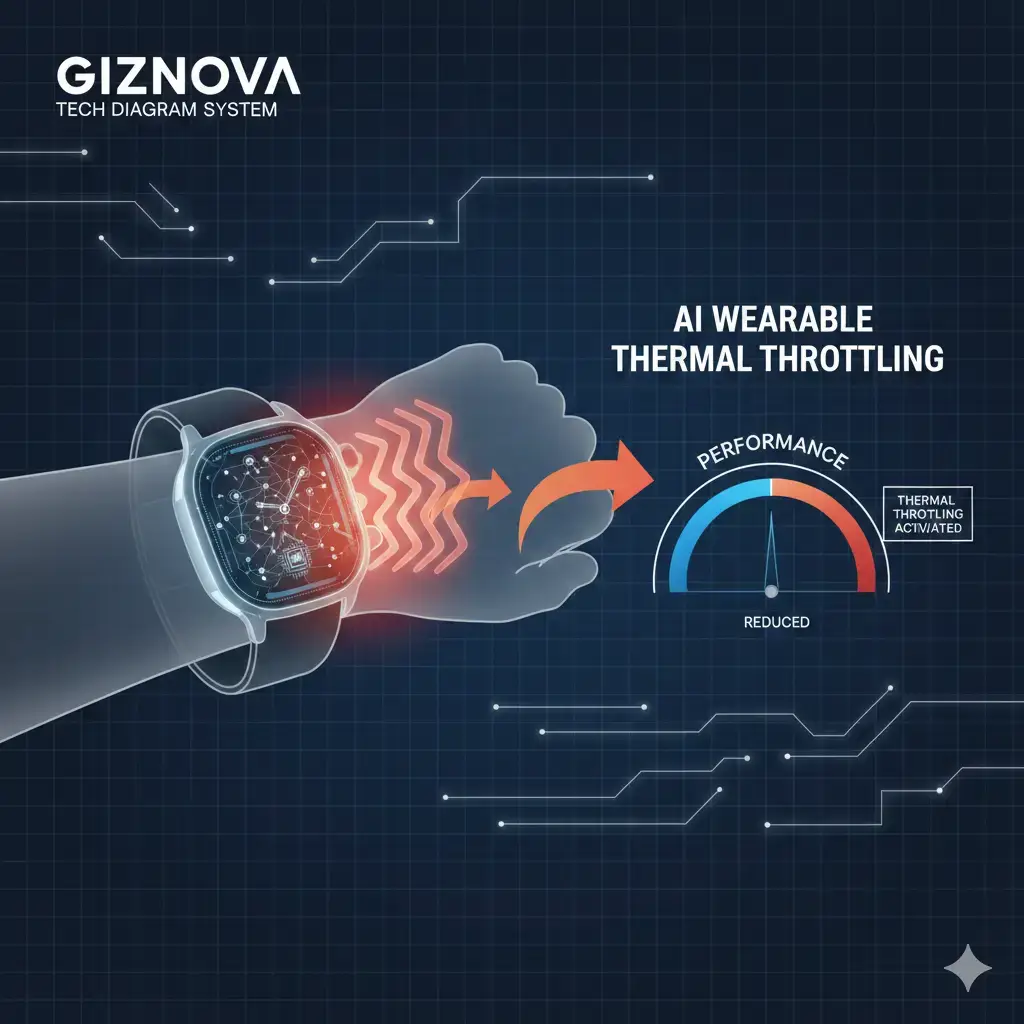

On-device AI means running AI models directly inside consumer devices, within hardware limits on power, thermals, memory, and latency, and under privacy requirements.